
Automatic invoice data extraction in Delphi apps via AI

Monday, July 28, 2025

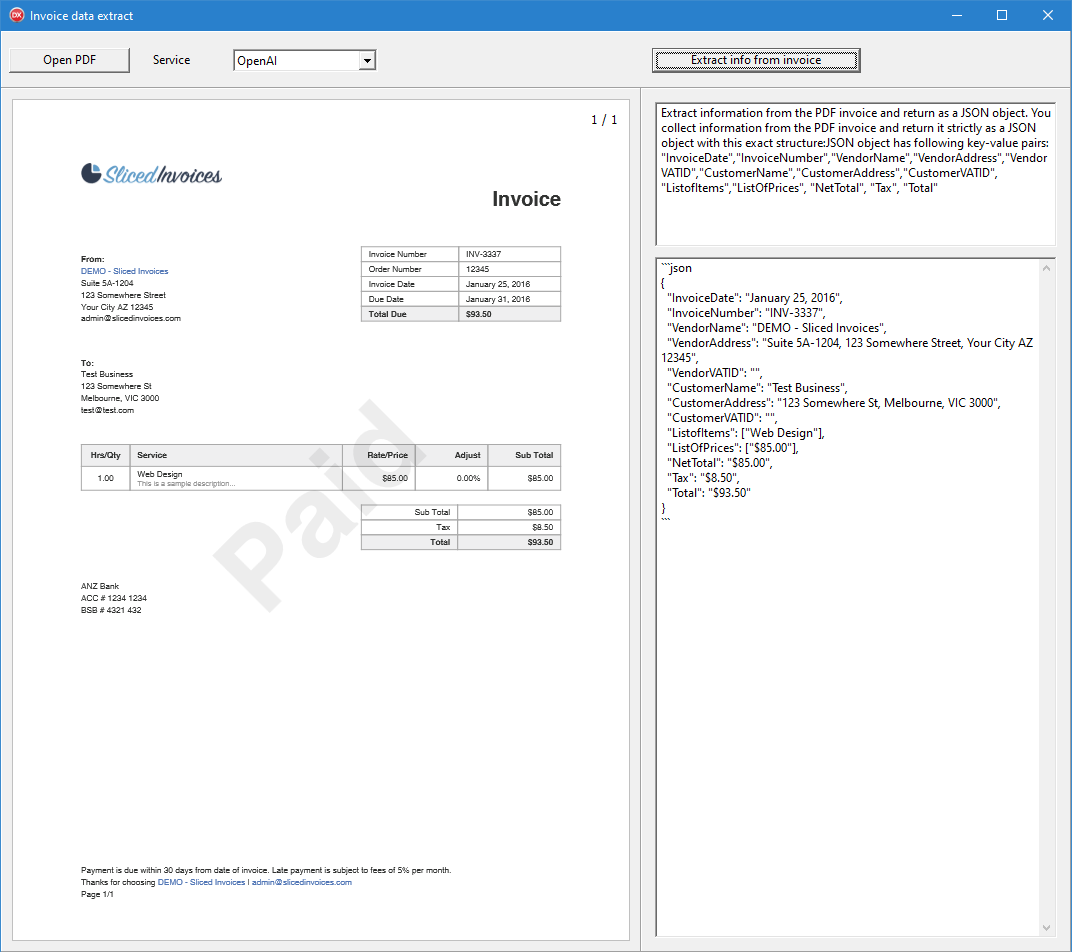

In this short example, we show how you can add automatic invoice data extraction to a Delphi app with a minimal amount of effort.

This is realized with the TMS AI Studio TTMSMCPCloudAI component, and this example can use any of 3 LLMs: OpenAI, Gemini or Claude.

How does it work?

It is actually quite simple. We use TTMSMCPCloudAI to send the PDF invoice together with a prompt, and in return we get a JSON object with the data we want to extract!

An essential part is providing a good prompt. We have seen good results from the following prompt setup:

TMSMCPCloudAI1.Context.Text := 'Extract information from the PDF invoice and return as a JSON object';
TMSMCPCloudAI1.SystemRole.Text :=
  'You collect information from the PDF invoice and return it strictly as a JSON object ' +
  'with this exact structure: JSON object has following key-value pairs: ' +
  '"InvoiceDate","InvoiceNumber","VendorName","VendorAddress","VendorVATID",' +
  '"CustomerName","CustomerAddress","CustomerVATID", "ListofItems","ListOfPrices", ' +
  '"NetTotal", "Tax", "Total"';

As you can see, we use the system role to specify the expected format of the JSON object containing the critical invoice data: exactly which information we want from the invoice and under which keys it should be returned. The main prompt is then simply "Extract information from the PDF invoice and return as a JSON object". Combining everything in the context also turned out to work.
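The system role text is essentially a fixed sentence followed by the list of keys, and the same keys are needed again when the app reads the response. As a minimal sketch, assuming only the standard Delphi RTL, the key list can be kept in one place and the system role generated from it. BuildSystemRole and InvoiceKeys are hypothetical names chosen for illustration, not part of TMS AI Studio; the console program simply prints the text that the VCL app would assign to TMSMCPCloudAI1.SystemRole.Text.

```pascal
program BuildInvoicePrompt;

{$APPTYPE CONSOLE}

uses
  System.SysUtils;

// Hypothetical helper (not part of TMS AI Studio): builds the system role
// text from a list of JSON key names so the keys are defined only once.
function BuildSystemRole(const Keys: array of string): string;
var
  I: Integer;
  KeyList: string;
begin
  KeyList := '';
  for I := Low(Keys) to High(Keys) do
  begin
    if I > Low(Keys) then
      KeyList := KeyList + ', ';
    // Each key is quoted, as in the hand-written prompt
    KeyList := KeyList + '"' + Keys[I] + '"';
  end;
  Result := 'You collect information from the PDF invoice and return it ' +
    'strictly as a JSON object with this exact structure: JSON object has ' +
    'following key-value pairs: ' + KeyList;
end;

const
  // Same keys (and same spelling, including "ListofItems") as the prompt above
  InvoiceKeys: array[0..12] of string = (
    'InvoiceDate', 'InvoiceNumber', 'VendorName', 'VendorAddress',
    'VendorVATID', 'CustomerName', 'CustomerAddress', 'CustomerVATID',
    'ListofItems', 'ListOfPrices', 'NetTotal', 'Tax', 'Total');

begin
  // In the VCL app this string would be assigned to
  // TMSMCPCloudAI1.SystemRole.Text instead of being printed.
  Writeln(BuildSystemRole(InvoiceKeys));
end.
```

With this approach, extracting an additional field only requires adding its key to InvoiceKeys.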

The PDF invoice is first uploaded with:

begin
  TMSMCPCloudAI1.UploadFile(INVOICEPDF, aiftPDF);
end;

When the upload is complete, i.e. after the OnFileUpload event is triggered, we can send the prompt with:

begin
  TMSMCPCloudAI1.Execute('process_invoice');
end;

Alternatively, the PDF file can be added with AddFile and the prompt executed right away:

begin
  TMSMCPCloudAI1.AddFile(INVOICEPDF, aiftPDF);
  TMSMCPCloudAI1.Execute('process_invoice');
end;

Selecting the LLM to be used for this operation is as simple as:

TMSMCPCloudAI1.Service := aiClaude;

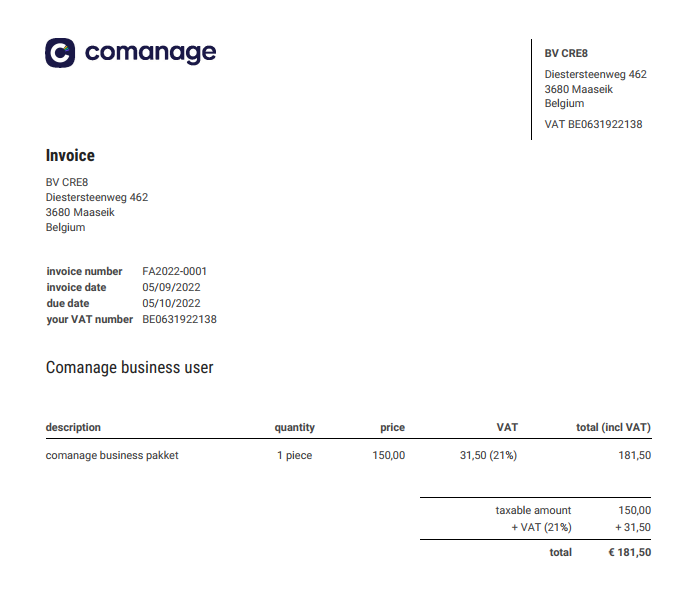

An example of the JSON object returned for an invoice:

{
  "InvoiceDate": "05/09/2022",
  "InvoiceNumber": "FA2022-0001",
  "VendorName": "BV CRE8",
  "VendorAddress": "Diestersteenweg 462, 3680 Maaseik, Belgium",
  "VendorVATID": "BE0631922138",
  "CustomerName": "Comanage business user",
  "CustomerAddress": "",
  "CustomerVATID": "BE0631922138",
  "ListofItems": ["comanage business pakket"],
  "ListOfPrices": [150.00],
  "NetTotal": 150.00,
  "Tax": 31.50,
  "Total": 181.50
}

The sample is available as a VCL application. Note that it uses the TAdvPDFViewer component, part of the TMS VCL UI Pack, to show a preview of the selected PDF file. The sample project download also includes 3 sample PDF files for your testing.
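An app that stores or books invoices will typically parse the returned JSON and sanity-check it before use, since an LLM is not guaranteed to return exactly the requested structure. Below is a minimal sketch using the standard System.JSON unit, assuming the response text is already available as a string (how TTMSMCPCloudAI hands over the response is not covered here). PrintItems, TotalsAreConsistent and the one-cent tolerance are illustrative choices, not part of the TMS sample.

```pascal
program ParseInvoiceJSON;

{$APPTYPE CONSOLE}

uses
  System.SysUtils, System.JSON;

const
  // Abridged copy of the sample response shown above
  SampleResponse =
    '{"InvoiceNumber": "FA2022-0001", "VendorName": "BV CRE8", ' +
    '"ListofItems": ["comanage business pakket"], "ListOfPrices": [150.00], ' +
    '"NetTotal": 150.00, "Tax": 31.50, "Total": 181.50}';

// Prints each entry of "ListofItems" next to the price at the same index
// in "ListOfPrices" (the prompt asks for two parallel arrays).
procedure PrintItems(const AJSON: string);
var
  Value: TJSONValue;
  ItemNames, Prices: TJSONArray;
  I: Integer;
begin
  Value := TJSONObject.ParseJSONValue(AJSON);  // nil if the text is not valid JSON
  try
    if not (Value is TJSONObject) then
      Exit;
    // Key names are case-sensitive and must match the system role exactly
    ItemNames := TJSONObject(Value).GetValue('ListofItems') as TJSONArray;
    Prices := TJSONObject(Value).GetValue('ListOfPrices') as TJSONArray;
    if (ItemNames = nil) or (Prices = nil) then
      Exit;
    for I := 0 to ItemNames.Count - 1 do
      if I < Prices.Count then
        Writeln(ItemNames.Items[I].Value, ': ',
          (Prices.Items[I] as TJSONNumber).AsDouble:0:2)
      else
        Writeln(ItemNames.Items[I].Value, ': (no price)');
  finally
    Value.Free;  // Free is safe on nil
  end;
end;

// Simple plausibility check: NetTotal + Tax should equal Total within a cent.
function TotalsAreConsistent(const AJSON: string): Boolean;
var
  Value: TJSONValue;
  Invoice: TJSONObject;
begin
  Result := False;
  Value := TJSONObject.ParseJSONValue(AJSON);
  try
    if not (Value is TJSONObject) then
      Exit;
    Invoice := TJSONObject(Value);
    // GetValue<Double> raises an exception when a key is missing
    Result := Abs(Invoice.GetValue<Double>('NetTotal') +
      Invoice.GetValue<Double>('Tax') -
      Invoice.GetValue<Double>('Total')) < 0.005;
  finally
    Value.Free;
  end;
end;

begin
  try
    PrintItems(SampleResponse);
    if TotalsAreConsistent(SampleResponse) then
      Writeln('Totals are consistent.')
    else
      Writeln('Totals do not add up: review this invoice manually.');
  except
    on E: Exception do
      Writeln('Could not read the invoice JSON: ', E.Message);
  end;
end.
```

Using Double with a small tolerance keeps the sketch short; a bookkeeping app may prefer the Currency type for amounts. Both routines bail out when the response cannot be parsed as a JSON object, so a malformed answer is flagged for manual review instead of being stored.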

Conclusion

Bruno Fierens

Related Blog Posts

- Add AI superpower to your Delphi & C++Builder apps part 1
- Add AI superpower to your Delphi & C++Builder apps part 2: function calling
- Add AI superpower to your Delphi & C++Builder apps part 3: multimodal LLM use
- Add AI superpower to your Delphi & C++Builder apps part 4: create MCP servers
- Add AI superpower to your Delphi & C++Builder apps part 5: create your MCP client
- Add AI superpower to your Delphi & C++Builder apps part 6: RAG
- Introducing TMS AI Studio: Your Complete AI Development Toolkit for Delphi
- Automatic invoice data extraction in Delphi apps via AI
- AI based scheduling in classic Delphi desktop apps
- Voice-Controlled Maps in Delphi with TMS AI Studio + OpenAI TTS/STT
- Creating an n8n Workflow to use a Logging MCP Server
- Supercharging Delphi Apps with TMS AI Studio v1.2 Toolsets: Fine-Grained AI Function Control
- AI-powered HTML Reports with Embedded Browser Visualization
- Additional audio transcribing support in TMS AI Studio v1.2.3.0 and more ...
- Introducing Attributes Support for MCP Servers in Delphi
- Using AI Services securely in TMS AI Studio
- Automate StellarDS database operations with AI via MCP
- TMS AI Studio v1.4 is bringing HTTP.sys to MCP
- Windows Service Deployment Guide for the HTTP.SYS-Ready MCP Server Built with TMS AI Studio
- Extending AI Image Capabilities in TMS AI Studio v1.5.0.0
- Try the Free TMS AI Studio RAG App

This blog post has received 15 comments.

2. Monday, July 28, 2025 at 7:32:17 PM

I haven’t tested it, but from looking at the technical description of the APIs, Gemini and OpenAI allow uploading batches of files and specifying multiple files to be processed by the prompt. But I don’t see why one-by-one processing would be problematic. The prompt token count is very small anyway compared to the token count of the invoice PDF:

Bruno Fierens

3. Tuesday, July 29, 2025 at 9:47:36 AM

Hello,

Would it work on other types of documents?

How long would it take to read 25,000 pages?

Does this require a specific OpenAI or other licensing fee?

Best regards

Julien ALBRECHT

4. Tuesday, July 29, 2025 at 11:28:52 AM

AFAIK, besides PDF, these 3 services support text files, CSV files, MD files and HTML files out of the box.

To use OpenAI, you need an OpenAI API key (the same applies to Gemini and Claude).

We haven’t done performance testing on so many files. In our testing, one invoice took a couple of seconds at most.

Bruno Fierens

5. Tuesday, July 29, 2025 at 1:18:54 PM

Nice and impressive demo, but if used in the EU it should only be done with self-hosted local LLMs because of the General Data Protection Regulation.

Suer Martin

6. Thursday, July 31, 2025 at 8:48:40 PM

Suer Martin - Your understanding of GDPR leaves a lot to be desired! This has little or nothing to do with personal data.

Edwards Peter

7. Thursday, July 31, 2025 at 10:14:15 PM

Based on your needs, when working with AI, you will find it is best to create a process that pulls the document page by page and processes each page, to ensure you don't hit token limits on each request. Extraction takes about 30-45 seconds in total on my end for the 3-page documents we are processing (but we are pulling more information than what is on the invoice). Track the response for each page request and combine the results in an array at the end if needed.

Paul Stohr

8. Thursday, July 31, 2025 at 11:34:43 PM

With local models under Ollama, you can do it too, but there needs to be an extra step of extracting each page of the PDF as an image and then using that instead, because there's no way to send a PDF to Ollama; it only accepts images.

Alexander Pastuhov

9. Friday, August 1, 2025 at 3:55:10 AM

So does this also work on Ollama?

Does it matter when an invoice contains more than one page, or will the returned JSON string still contain everything needed as extracted from a multi-page invoice? Or is there a recommended alternative way to detect multi-page invoices and prompt the AI differently for the desired JSON string?

van Rensburg Robert

10. Friday, August 1, 2025 at 7:32:26 AM

This example does not work as-is with Ollama because, at this moment, Ollama has no built-in support for parsing PDF files natively. You’d need to do the parsing upfront and either provide the invoice as textual data to the model used by Ollama or extract it as an image and use a visual model with Ollama.

At this moment, OpenAI, Gemini and Claude can work directly with PDF files.

Bruno Fierens

11. Thursday, August 7, 2025 at 2:28:34 PM

Hi,

If I buy TMS AI Studio to process my invoices, is there an AI charge for each PDF invoice I process?

Thank you

Josee Letarte

12. Friday, August 8, 2025 at 11:34:14 AM

This is really great, thanks for providing real-life examples. I'm just concerned about data "privacy" and do not feel comfortable sending our invoices to "free to use" AI.

Will it be hard to add Copilot AI? Also, how complicated is it to create the same project working with a local Ollama + llama vision 3.2 model?

stephane.wierzbicki

13. Friday, August 29, 2025 at 12:51:27 PM

All very nice and very interesting. But how do we manage the privacy and security of data sent to AI services?

I myself am building a data recognition service that analyzes identity documents or passports.

But as with invoices, the REAL problem is: How can we manage these processes with AI in complete security?

Obviously using local or standalone (private) AI services.

But are these services as capable or powerful as the more renowned and well-known online ones? How many resources are needed?

Unfortunately, despite having excellent tools available, the real issue to address is this one involving sensitive data, before writing code.

Great job TMS!

Stefano Monterisi

14. Friday, August 29, 2025 at 2:13:54 PM

Different cloud LLMs have different policies.

An analysis of OpenAI ChatGPT security & privacy can be found here:

https://www.security.org/digital-safety/is-chatgpt-safe

For Anthropic Claude there is this reference as starting point:

https://privacy.anthropic.com/en/articles/10458704-how-does-anthropic-protect-the-personal-data-of-claude-ai-users

For Google Gemini see:

https://safety.google/gemini/

And then of course there is Ollama, which runs locally with a local model, so there shouldn't be a privacy or security concern, provided your internal network is properly secured.

Bruno Fierens

15. Friday, August 29, 2025 at 4:13:51 PM

Thanks, Bruno

Stefano Monterisi
