Creating an n8n Workflow to use a Logging MCP Server

Thursday, August 14, 2025

The Model Context Protocol (MCP) has become a standard way for AI agents to interact with external data sources and tools. The newest version of TMS AI Studio adds Streamable HTTP Transport support, so MCP servers can be hosted anywhere and accessed by multiple clients simultaneously. This opens up exciting possibilities for building distributed AI workflows.

In this blog post, we'll showcase this capability by creating a comprehensive logging workflow in n8n that connects to our MCP Server to process data and generate visual charts. This demonstrates how modern AI tooling can work together to create powerful, automated data processing pipelines.

What is n8n?

n8n is a powerful, open-source workflow automation platform that enables you to connect different services and automate complex business processes. Think of it as "IFTTT for developers" but with much more sophisticated capabilities.

Key Features of n8n:

- Visual Workflow Builder: Drag-and-drop interface for creating complex automation flows

- 400+ Integrations: Connect to popular services like Slack, Google Sheets, databases, and AI models

- Agentic Flows: Support for AI agents that can make decisions and take actions autonomously

- Self-Hosted: Full control over your data and workflows

- Extensible: Custom nodes and integrations

Setting up your n8n.io account

Getting started with n8n is straightforward:

- Cloud Option: Visit n8n.io and sign up for a cloud account

- Self-Hosted: Use Docker or npm to run locally

- Import Our Workflow: Download our pre-built workflow JSON from our repository

Getting started with our sample workflow & our public MCP server

Our public MCP server is hosted at http://88.198.69.227:8934/mcp. It can also be consumed by an MCP client you build yourself with TMS AI Studio, or by any other MCP client.

Consume from n8n cloud:

You can use our sample MCP server from your n8n cloud account right away. Import our complete logging workflow using this JSON file: copy its contents and paste them into n8n's workflow import feature.

Consume from local self-hosted n8n:

Follow the steps in this n8n article to host n8n locally on your machine with Docker, then import the same JSON file into your locally hosted instance.

Setup: Build our sample MCP Logging Server yourself

Our MCP Server is built using Delphi and the TMS AI Studio components, specifically designed to demonstrate the power of the new Streamable HTTP Transport. You can download the full source code here. Here's what makes it special:

Server Capabilities

The server provides comprehensive logging and analytics tools:

- get-daily-logs: Retrieves logs for specific dates with optional filtering

- get-logs-by-method: Analyzes request patterns grouped by method type

- get-log-stats: Provides overall statistics including success rates and performance metrics

- simulate-work: Generates test data for different work types (CPU, I/O, network)

- test-tool: Runs various test scenarios for quality assurance
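To make the tool list concrete, here is a minimal sketch of the JSON-RPC message an MCP client sends to invoke one of these tools. It is written in Python for brevity and is not taken from the server's source. The `tools/call` method and the `name`/`arguments` fields come from the MCP specification; the `date` argument is an assumption for illustration, since the real argument names are whatever the server reports via `tools/list`.

```python
import itertools
import json

# Monotonically increasing JSON-RPC request ids for this client.
_request_ids = itertools.count(1)


def build_tool_call(tool_name, arguments=None):
    """Return a JSON-RPC 2.0 `tools/call` request for an MCP server."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments or {}},
    }


# "date" is a hypothetical argument name; check the schema from tools/list.
request = build_tool_call("get-daily-logs", {"date": "2025-08-14"})
print(json.dumps(request, indent=2))
```

An MCP client such as the n8n MCP Client node builds messages of this shape for you; the sketch only shows what travels over the wire.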

Implementing the streamable HTTP Transport

The appeal of our implementation is how little setup it needs: converting an existing MCP server to Streamable HTTP takes only a few lines. Use the following code to integrate it into your application.

    // Create HTTP transport with custom endpoint
    FHTTPTransport := TTMSMCPStreamableHTTPTransport.Create(nil, FPort, '/mcp');
    FMCPServer.Transport := FHTTPTransport;

    // Configure server
    FMCPServer.ServerName := 'MCPLoggingServer';
    FMCPServer.ServerVersion := '1.0.0';

This configuration allows:

- Remote Access: Server can be hosted anywhere and accessed via HTTP

- Multiple Clients: Support for concurrent connections

- Stateful Sessions: Persistent connections with session management

- SSL Support: Optional HTTPS encryption for production deployments
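From the client's side, the session handling listed above can be sketched roughly as follows. This is an illustrative Python client using only the standard library, not part of TMS AI Studio. It assumes the server follows the MCP Streamable HTTP transport: the client POSTs JSON-RPC messages, accepts either a JSON or an SSE reply, and echoes back the `Mcp-Session-Id` header it received during `initialize`. The SSE parsing is simplified and assumes one `data:` line per event.

```python
import json
import urllib.request

MCP_URL = "http://localhost:8934/mcp"  # endpoint used in this post; adjust as needed

INITIALIZE = {
    "jsonrpc": "2.0", "id": 1, "method": "initialize",
    "params": {
        "protocolVersion": "2025-03-26",
        "capabilities": {},
        "clientInfo": {"name": "sketch-client", "version": "0.1"},
    },
}


def mcp_headers(session_id=None):
    """Headers a Streamable HTTP client sends with every POST."""
    headers = {
        "Content-Type": "application/json",
        # The server may answer with plain JSON or with an SSE stream.
        "Accept": "application/json, text/event-stream",
    }
    if session_id:
        headers["Mcp-Session-Id"] = session_id
    return headers


def parse_body(content_type, text):
    """Return the JSON-RPC messages found in a JSON or SSE response body."""
    if content_type.lower().startswith("text/event-stream"):
        return [json.loads(line[5:].strip())
                for line in text.splitlines() if line.startswith("data:")]
    return [json.loads(text)]


def post(message, session_id=None, url=MCP_URL):
    """POST one JSON-RPC message; return (messages, session id)."""
    req = urllib.request.Request(
        url, data=json.dumps(message).encode("utf-8"),
        headers=mcp_headers(session_id), method="POST")
    with urllib.request.urlopen(req, timeout=10) as resp:
        text = resp.read().decode("utf-8")
        session = resp.headers.get("Mcp-Session-Id") or session_id
        content_type = resp.headers.get("Content-Type", "application/json")
    # Notifications are acknowledged with an empty body.
    return (parse_body(content_type, text) if text.strip() else []), session


def list_tools(url=MCP_URL):
    """Open a session and return the server's reply to tools/list."""
    _, session = post(INITIALIZE, url=url)
    post({"jsonrpc": "2.0", "method": "notifications/initialized"}, session, url)
    messages, _ = post({"jsonrpc": "2.0", "id": 2, "method": "tools/list"}, session, url)
    return messages
```

Calling `list_tools()` against a running server should return the tool descriptions. Nothing in the sketch touches the network until that function is called.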

Use the following commands to run your MCP Server:

    # Default configuration (port 8080)
    StreamableLoggerDemo.exe

    # Custom port and database
    StreamableLoggerDemo.exe -p 8934 -d custom_logs.db

    # Generate sample data
    StreamableLoggerDemo.exe -s

With -p 8934, the server is then reachable at http://localhost:8934/mcp (or at your configured port).

Create the n8n Workflow

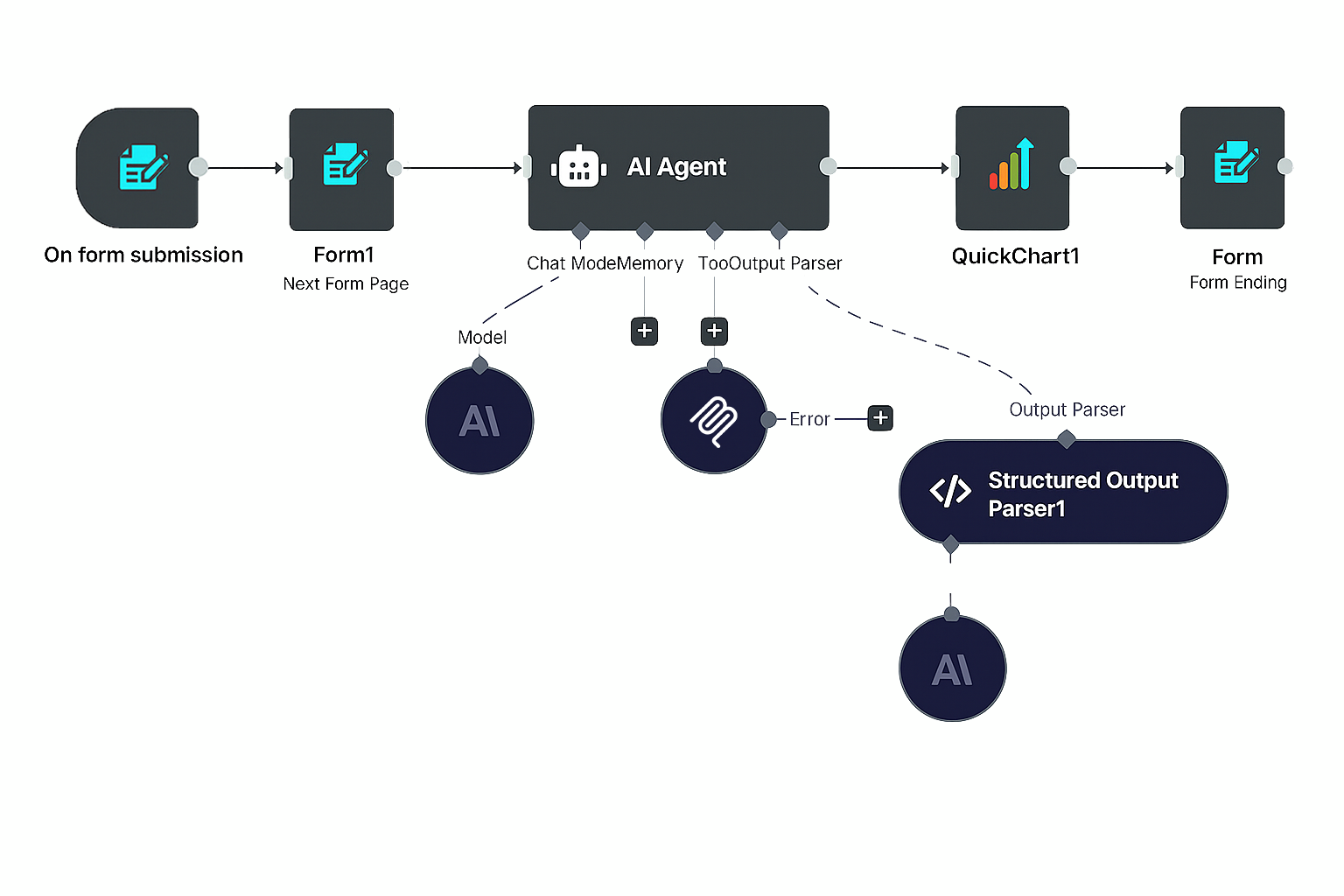

Our n8n workflow demonstrates a complete agentic flow that intelligently processes logging data and creates visualizations. Let's break down the key components:

Essential Nodes Configuration

1. Form Trigger Node

- Purpose: Creates a web form interface for user input

- Configuration:

  - Form Title: "Check logging Stats"

  - Description: "Check logging stats for your MCP server"

- Important: Note the generated webhook URL; it becomes your workflow endpoint

2. MCP Client Node

This is where the magic happens. Configure it with:

- Endpoint URL: http://host.docker.internal:8934/mcp (or use http://88.198.69.227:8934/mcp to use our public MCP server)

- Server Transport: httpStreamable

- Tools Selection: Include the specific tools you want to use:

  - get-daily-logs

  - get-logs-by-method

  - get-log-stats

Important Configuration Notes:

- Use host.docker.internal if running n8n in Docker and your MCP server locally

- For cloud n8n, ensure your MCP server is publicly accessible

- The serverTransport: httpStreamable setting is crucial for the new transport
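The endpoint rules in these notes can be summed up in a small helper. This is only a sketch to make the decision explicit; the function name and the location labels ("docker", "native", "cloud") are made up for illustration and are not n8n settings.

```python
def mcp_endpoint(n8n_location, port=8934, public_host=None):
    """Pick the MCP endpoint URL an n8n instance should use.

    n8n_location:
      "docker" - n8n runs in Docker, the MCP server runs on the host
      "native" - n8n and the MCP server run on the same machine
      "cloud"  - n8n cloud; the MCP server must be publicly reachable
    """
    if n8n_location == "docker":
        host = "host.docker.internal"
    elif n8n_location == "native":
        host = "localhost"
    elif n8n_location == "cloud":
        if not public_host:
            raise ValueError("n8n cloud needs a publicly reachable host")
        host = public_host
    else:
        raise ValueError(f"unknown n8n location: {n8n_location!r}")
    return f"http://{host}:{port}/mcp"


print(mcp_endpoint("docker"))
print(mcp_endpoint("cloud", public_host="88.198.69.227"))
```

Note that host.docker.internal resolves out of the box with Docker Desktop; on a plain Linux Docker engine you typically have to add it yourself, for example with `--add-host=host.docker.internal:host-gateway`.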

3. AI Agent Node

Configure your AI model connection:

- Model: Claude Sonnet 4 or Claude Opus 4

- Prompt Type: Define custom prompts

- Output Parser: Structured output for chart generation

Key Configuration: Set up your Anthropic API credentials in n8n's credential manager.

4. Structured Output Parser

Ensures the AI agent returns properly formatted data for chart generation.
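What "properly formatted" means depends on the schema you give the parser. As a rough sketch of the kind of check involved, the Python function below validates a JSON answer before it is handed to a chart step. The field names `chartType`, `labels` and `data` are assumptions for this example and are not necessarily the ones used in our workflow JSON.

```python
import json

ALLOWED_CHART_TYPES = {"bar", "line", "pie", "doughnut"}


def parse_chart_spec(raw):
    """Validate the agent's JSON answer and return it as a dict.

    Raises ValueError (json.JSONDecodeError is a subclass) on bad input.
    The field names are hypothetical and chosen for this sketch only.
    """
    spec = json.loads(raw)
    if not isinstance(spec, dict):
        raise ValueError("expected a JSON object")
    if spec.get("chartType") not in ALLOWED_CHART_TYPES:
        raise ValueError(f"unsupported chart type: {spec.get('chartType')!r}")
    labels, data = spec.get("labels"), spec.get("data")
    if not isinstance(labels, list) or not isinstance(data, list):
        raise ValueError("labels and data must both be lists")
    if len(labels) != len(data):
        raise ValueError("labels and data must have the same length")
    for value in data:
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise ValueError("data must contain only numbers")
    return spec
```

Rejecting malformed output at this point keeps errors close to the agent step instead of surfacing later as an empty or broken chart.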

5. QuickChart Node

Generates beautiful charts from the processed data:

- Chart Type: Dynamic based on AI agent output

- Labels: Extracted from data analysis

- Data: Processed metrics from MCP server
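The n8n node wraps the QuickChart web service, which renders a Chart.js configuration passed in the `c` query parameter. The sketch below builds such a URL by hand in Python, just to show what the three settings above turn into. The function name, the default title and the sample values are made up for illustration.

```python
import json
from urllib.parse import quote


def quickchart_url(chart_type, labels, data, title="MCP log stats",
                   width=500, height=300):
    """Build a QuickChart image URL from a chart type, labels and values."""
    config = {
        "type": chart_type,
        "data": {
            "labels": labels,
            "datasets": [{"label": title, "data": data}],
        },
    }
    # The Chart.js config travels as URL-encoded JSON in the `c` parameter.
    encoded = quote(json.dumps(config, separators=(",", ":")), safe="")
    return f"https://quickchart.io/chart?width={width}&height={height}&c={encoded}"


# Made-up values, only to show the shape of the call.
print(quickchart_url("pie", ["tools/call", "tools/list"], [75, 25]))
```

Opening the printed URL in a browser returns the rendered chart as an image.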

Where to Put Your API Keys

Critical Setup: You'll need to configure credentials in n8n:

- Navigate to: Settings → Credentials

- Add Anthropic API:

  - Name: "Anthropic account"

  - API Key: Your Anthropic API key

- MCP Server:

  - No authentication required for local development

  - For production, implement proper authentication in your MCP server
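What "proper authentication" looks like is up to you. One common minimal option is a shared bearer token. The Python sketch below shows only the check itself, under the assumption that the token arrives in an `Authorization: Bearer <token>` header; it is not how the sample server works, since the sample server has no authentication at all.

```python
import hmac


def is_authorized(headers, expected_token):
    """Check an `Authorization: Bearer <token>` header in constant time.

    `headers` is assumed to be a plain dict with canonical header names.
    """
    value = headers.get("Authorization", "")
    scheme, _, token = value.partition(" ")
    if scheme.lower() != "bearer" or not token:
        return False
    # compare_digest avoids leaking how many characters matched via timing.
    return hmac.compare_digest(token.encode("utf-8"),
                               expected_token.encode("utf-8"))
```

Keep the expected token out of source control, for example in an environment variable or a secrets store, and combine it with the SSL support mentioned earlier so the token is not sent in clear text.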

Workflow Logic Flow

- User Input → Form submission triggers the workflow

- AI Analysis → Agent analyzes the prompt and determines which MCP tools to use

- Data Retrieval → MCP Client calls appropriate logging tools

- Data Processing → AI agent processes the raw data into chart-ready format

- Visualization → QuickChart generates the final visualization

- Response → User receives an interactive chart

Running the Sample

Prerequisites Checklist

- MCP Logging Server running (port 8934 in this post's examples)

- n8n instance running (cloud or local)

- Anthropic API key configured

- Workflow imported and saved

Testing the Workflow

- Start Your MCP Server or use the public one already configured:

      StreamableLoggerDemo.exe -p 8934 -s

- Execute the Workflow:

  - Access the form trigger URL in your browser

  - Enter a prompt like: "Show me the success rate by method as a pie chart"

  - Submit and watch the magic happen!

- Example Prompts to Try:

  - "Create a bar chart showing request counts by day"

  - "Show me response time trends as a line chart"

  - "Display error rates by method type"

  - "Generate a pie chart of work simulation types"
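In the workflow, the AI agent is the one that turns raw log data into chart-ready numbers. To give a feel for what a prompt such as "success rate by method" boils down to, here is a small Python sketch of that aggregation. The record shape (`method`, `success`) and the sample records are made up for illustration and do not reflect the server's actual log format.

```python
from collections import defaultdict


def success_rate_by_method(records):
    """Return {method: success rate in percent} for a list of log records."""
    totals = defaultdict(int)
    successes = defaultdict(int)
    for record in records:
        totals[record["method"]] += 1
        if record["success"]:
            successes[record["method"]] += 1
    return {method: round(100.0 * successes[method] / totals[method], 1)
            for method in sorted(totals)}


# Made-up sample records, not real server output.
sample = [
    {"method": "tools/call", "success": True},
    {"method": "tools/call", "success": False},
    {"method": "tools/list", "success": True},
    {"method": "initialize", "success": True},
]
rates = success_rate_by_method(sample)
print(rates)  # {'initialize': 100.0, 'tools/call': 50.0, 'tools/list': 100.0}
```

The keys become the chart labels and the values become the data series.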

Conclusion

This integration demonstrates the powerful synergy between modern AI tools and traditional software development. By combining:

- TMS AI Studio's MCP Server with Streamable HTTP Transport

- n8n's workflow automation capabilities

- AI agents for intelligent data processing

- Dynamic visualization generation

we've created a system that can automatically analyze complex logging data and present insights in an intuitive visual format. This pattern can be extended to numerous other use cases:

- Business Intelligence: Automated report generation

- DevOps Monitoring: Intelligent alerting and diagnostics

- Data Analysis: Automated insight discovery

- Quality Assurance: Automated testing and reporting

The Streamable HTTP Transport opens up new architectural possibilities, allowing MCP servers to be truly distributed services that can scale independently and serve multiple clients simultaneously.

Next Steps: Try building your own MCP server with different data sources, experiment with other n8n integrations, and explore the growing ecosystem of MCP-compatible tools.

Start Building the Future Today

The AI revolution is here, and with TMS AI Studio, Delphi developers are perfectly positioned to be at the forefront. Whether you're building intelligent business applications, creating AI-powered tools, or exploring the possibilities of the Model Context Protocol, TMS AI Studio gives you everything you need to succeed.

Ready to transform your development process?

Starting at 225 EUR for a single developer license, TMS AI Studio offers exceptional value for the comprehensive AI development toolkit it provides.

Join the growing community of developers who are already building tomorrow's AI applications with TMS AI Studio. The future of intelligent software development starts here.

TMS AI Studio requires Delphi 11.0 or higher.

Bradley Velghe
