AI based scheduling in classic Delphi desktop apps

Monday, August 4, 2025

In the evolving landscape of software development, the fusion of classic Delphi desktop applications with cutting-edge AI capabilities is no longer a futuristic dream; it's a reality. Thanks to TMS AI Studio, developers can now harness the power of Large Language Models (LLMs) from services such as OpenAI, Claude, Gemini, Grok, Mistral, Ollama, and DeepSeek directly in their VCL or FMX apps.

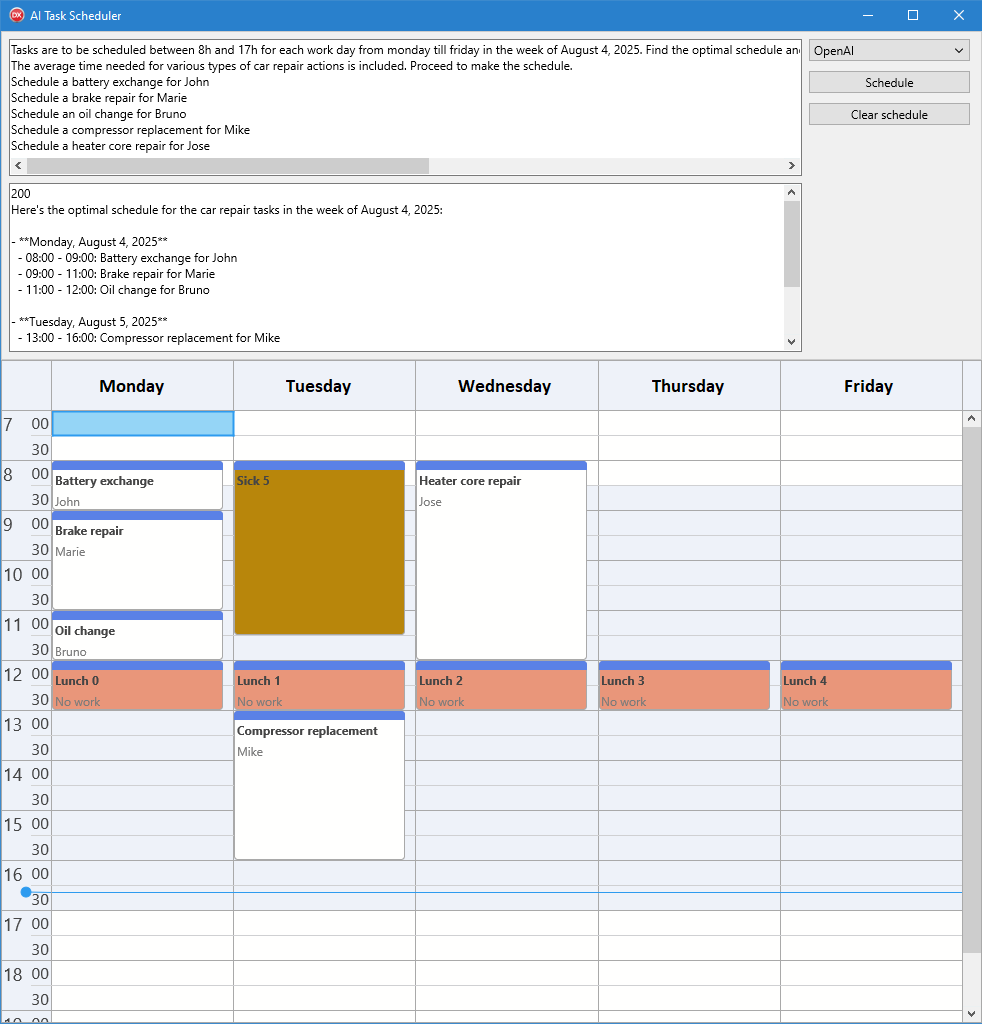

Today, we're excited to showcase a real-world Delphi application that does something remarkably useful: it automatically schedules car repair tasks in a workshop, leveraging LLM function calling, prompt engineering, and real-time file-based context injection. This isn't just an upgrade—it's a leap forward in how we approach automation and intelligent assistance in desktop software.

The Power Behind the App: Delphi + LLM + TMS AI Studio

This application uses the TTMSMCPCloudAI component from the TMS AI Studio suite to interact seamlessly with a variety of LLM providers. It enables:

- Dynamic selection of the AI service (OpenAI, Claude, Gemini, etc.)
- Injection of a custom car maintenance knowledge file (carmaintenance.txt)
- Setting up function calls such as AddSchedule, GetCurrentTime, and GetOccupiedList that the LLM can call automatically
- Asking the LLM for intelligent task scheduling that respects existing calendar constraints
- Displaying booked and available time slots in our visual TMS FNC Planner component

Here's a quick glance at what happens when the user clicks the "Execute" button:

With this simple code, the LLM receives a prompt along with real-world context in the form of a domain-specific file. The file carmaintenance.txt is a text file that contains the typical repairs carried out at a car repair shop and the approximate time each one takes. (For this example, the overview of typical car maintenance actions and their required time was itself obtained from Grok AI.) Now the AI doesn't have to guess task durations; it looks them up in the file.
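To make the context-injection idea concrete, here is a minimal Python sketch (the demo itself is written in Delphi) of how the contents of a domain file can be combined with the user's request into chat messages. The names build_messages and load_knowledge are hypothetical, and the role/content message layout follows the common chat-completion convention; it is not necessarily the exact format TTMSMCPCloudAI sends to a provider.

```python
from __future__ import annotations

from pathlib import Path


def load_knowledge(path: str) -> str:
    """Read a domain knowledge file such as carmaintenance.txt."""
    return Path(path).read_text(encoding="utf-8")


def build_messages(user_request: str, knowledge: str) -> list[dict]:
    """Combine domain knowledge and the user's request into chat messages.

    The whole knowledge text goes into the system message, so the model can
    look up task durations instead of guessing them.
    """
    system = (
        "You schedule car repair tasks in a workshop. "
        "Use the maintenance guide below for task durations.\n\n"
        "--- maintenance guide ---\n" + knowledge.strip()
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_request},
    ]
```

For example, build_messages(request, load_knowledge("carmaintenance.txt")) sends the complete guide with every request. For a small file that is simpler than retrieval, at the cost of some extra tokens per call.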

What LLMs Bring to the Table

The integration with TMS AI Studio enables LLM function calling, meaning the AI can intelligently decide when to call a Delphi procedure (like adding a schedule) and with which parameters. These functions are declared as tools on the TTMSMCPCloudAI component in the Delphi app, for example:
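In the demo the tools are configured on the Delphi component. Providers such as OpenAI ultimately receive each tool as a name, a description, and a JSON Schema describing its parameters. As an illustration only, here is a Python sketch of an AddSchedule declaration in OpenAI's function-tool format; the parameter names title, start, and end are assumptions and not necessarily the ones used in the demo.

```python
import json

# Hypothetical AddSchedule declaration; the demo's actual parameters may differ.
ADD_SCHEDULE_TOOL = {
    "type": "function",
    "function": {
        "name": "AddSchedule",
        "description": "Add a maintenance task to the workshop planner.",
        "parameters": {
            "type": "object",
            "properties": {
                "title": {"type": "string", "description": "Task and customer name"},
                "start": {"type": "string", "description": "Start time, ISO 8601 (YYYY-MM-DDTHH:MM)"},
                "end": {"type": "string", "description": "End time, ISO 8601 (YYYY-MM-DDTHH:MM)"},
            },
            "required": ["title", "start", "end"],
        },
    },
}


def validate_schema(tool: dict) -> None:
    """Raise ValueError if a required parameter is not declared in properties."""
    params = tool["function"]["parameters"]
    missing = [name for name in params["required"] if name not in params["properties"]]
    if missing:
        raise ValueError(f"required but undeclared: {missing}")


if __name__ == "__main__":
    validate_schema(ADD_SCHEDULE_TOOL)
    print(json.dumps(ADD_SCHEDULE_TOOL, indent=2))  # what a provider would receive
```

The descriptions matter as much as the types: they are the only hint the model gets about the expected date format.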

Here we have defined the AddSchedule function to allow the LLM to add an item to our visual TMS FNC Planner. When the LLM decides to call this function, the OnExecute event of this tool is triggered, and this is where we add the Delphi code that actually interacts with the Planner component and adds an item. The code is as follows:
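Conceptually, the OnExecute logic comes down to four steps: parse the arguments the LLM supplied, validate them, add a planner item, and report back. Here is a minimal Python sketch of that flow. It assumes the arguments arrive as a JSON string with the hypothetical title/start/end fields, and it uses a hypothetical in-memory Planner class in place of the TMS FNC Planner.

```python
import json
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class PlannerItem:
    title: str
    start: datetime
    end: datetime


@dataclass
class Planner:  # hypothetical stand-in for the TMS FNC Planner
    items: list = field(default_factory=list)


def handle_add_schedule(planner: Planner, arguments: str) -> str:
    """Tool handler: parse the LLM's JSON arguments and add a planner item.

    Returns a JSON result string that is passed back to the LLM.
    """
    args = json.loads(arguments)
    start = datetime.fromisoformat(args["start"])
    end = datetime.fromisoformat(args["end"])
    if end <= start:
        # Report the problem instead of raising, so the LLM can retry.
        return json.dumps({"ok": False, "error": "end must be after start"})
    planner.items.append(PlannerItem(args["title"], start, end))
    return json.dumps({"ok": True, "count": len(planner.items)})
```

Returning an error result instead of raising an exception gives the model a chance to correct its call in the next turn.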

In a similar way, we have set up function calling to get the current time, so the LLM knows from which moment on maintenance can be scheduled, as well as to get a list of the time slots already occupied in the Planner.

This is the tool setup for it:
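Here is a Python sketch of the two supporting tools, again with hypothetical details. Neither GetCurrentTime nor GetOccupiedList needs parameters, so their schemas declare an empty object. The GetCurrentTime handler simply returns the local time as an ISO 8601 string truncated to minutes. The optional clock parameter is an addition for testability and is not part of the demo.

```python
from datetime import datetime
from typing import Callable, Optional

# Neither tool takes parameters, so each declares an empty parameter object
# (OpenAI-style function-tool format, used here for illustration).
GET_CURRENT_TIME_TOOL = {
    "type": "function",
    "function": {
        "name": "GetCurrentTime",
        "description": "Return the workshop's current local date and time.",
        "parameters": {"type": "object", "properties": {}},
    },
}

GET_OCCUPIED_LIST_TOOL = {
    "type": "function",
    "function": {
        "name": "GetOccupiedList",
        "description": "Return the start and end times of all booked planner items.",
        "parameters": {"type": "object", "properties": {}},
    },
}


def handle_get_current_time(clock: Optional[Callable[[], datetime]] = None) -> str:
    """Return local time as ISO 8601 truncated to minutes, e.g. '2025-08-04T10:15'."""
    now = (clock or datetime.now)()
    return now.isoformat(timespec="minutes")
```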

And this is the handler. It loops through all items in the TMS FNC Planner and returns an array of start and end times of existing scheduled items in the Planner:
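The same handler can be sketched in Python. It walks a list of hypothetical PlannerItem records (standing in for the Planner's items), sorts them by start time, and returns a JSON array of start/end pairs. The exact JSON shape the demo returns is an assumption here.

```python
import json
from dataclasses import dataclass
from datetime import datetime


@dataclass
class PlannerItem:  # hypothetical stand-in for a TMS FNC Planner item
    title: str
    start: datetime
    end: datetime


def handle_get_occupied_list(items: list) -> str:
    """Return a JSON array of {"start", "end"} pairs, sorted by start time."""
    occupied = [
        {
            "start": item.start.isoformat(timespec="minutes"),
            "end": item.end.isoformat(timespec="minutes"),
        }
        for item in sorted(items, key=lambda item: item.start)
    ]
    return json.dumps(occupied)
```

Only the times are returned, not titles or customer names. That keeps the payload small and avoids sending customer data to the LLM provider when it is not needed for the conflict check.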

From Natural Language to Concrete Schedule

Imagine a user types:

"Book an oil change for Mr. Smith on Tuesday morning."

The app:

- Sends this to the LLM along with the existing schedule and maintenance guide.
- The LLM parses the request, checks for conflicts using GetOccupiedList, and finally calls AddSchedule.
- Delphi handles this via the DoHandleAddSchedule event handler and updates the calendar UI.
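The conflict check the LLM performs is simple interval logic. The Python sketch below does the same thing deterministically, which could also be useful for validating a slot the LLM proposes before it is added. The opening hours (08:00 to 17:00), the 12:00 to 13:00 lunch break, and the 15-minute search step are assumptions for illustration only.

```python
from datetime import date, datetime, time, timedelta

WORK_START, WORK_END = time(8, 0), time(17, 0)  # assumed opening hours
LUNCH = (time(12, 0), time(13, 0))              # assumed lunch break


def overlaps(a_start: datetime, a_end: datetime, b_start: datetime, b_end: datetime) -> bool:
    """Half-open intervals overlap if each one starts before the other ends."""
    return a_start < b_end and b_start < a_end


def first_free_slot(day: date, duration: timedelta, occupied: list,
                    step: timedelta = timedelta(minutes=15)):
    """Return the earliest (start, end) on `day` that avoids all busy intervals, or None."""
    lunch = (datetime.combine(day, LUNCH[0]), datetime.combine(day, LUNCH[1]))
    busy = list(occupied) + [lunch]
    start = datetime.combine(day, WORK_START)
    close = datetime.combine(day, WORK_END)
    while start + duration <= close:
        end = start + duration
        if not any(overlaps(start, end, b_start, b_end) for b_start, b_end in busy):
            return start, end
        start += step
    return None
```

For example, with 08:00 to 11:00 already booked, a two-hour job cannot fit before lunch, so the earliest slot is 13:00 to 15:00.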

This is where classic meets futuristic. The app feels like a smart assistant, not a rigid form-based tool.

Why This Matters

Delphi developers can now:

- Extend existing apps with AI-assisted logic
- Build intelligent tools without rewriting in Python or JavaScript
- Use local or cloud-hosted LLMs depending on need and privacy

And thanks to TMS AI Studio, this is done using drag-and-drop components and well-known Delphi patterns, so there is no need to leave your comfort zone.

The Journey Continues: Tuning, Testing & Experimenting

No two LLMs are alike. Some handle temporal logic better, while others need more verbose instructions. This means:

- You experiment with different LLM providers
- You refine your prompts over time
- You observe what works best for your users and domain

TMS AI Studio makes this easy: just change the selection in the combo box and you switch providers on the fly! Your application logic remains 100% the same, regardless of which LLM you integrate with:
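Provider independence works because the tool handlers only ever see a tool name and its arguments. Here is a hypothetical Python sketch of that idea. TMS AI Studio performs the provider-specific translation itself; the ToolRegistry and ToolCall names are invented for this illustration. Handlers are registered once by name, and whichever provider is selected, the resulting tool call is dispatched in the same way.

```python
import json
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class ToolCall:
    name: str       # tool name chosen by the LLM, e.g. "GetCurrentTime"
    arguments: str  # JSON-encoded arguments, possibly empty


Handler = Callable[[dict], str]


class ToolRegistry:
    """Maps tool names to handlers, independent of the selected LLM provider."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, name: str, handler: Handler) -> None:
        self._handlers[name] = handler

    def dispatch(self, call: ToolCall) -> str:
        handler = self._handlers.get(call.name)
        if handler is None:
            # Unknown tool names are reported back instead of crashing the app.
            return json.dumps({"error": f"unknown tool {call.name}"})
        args = json.loads(call.arguments) if call.arguments else {}
        return handler(args)
```

Switching from OpenAI to Claude then only changes which client produces the ToolCall objects; the registered handlers stay untouched.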

Demo app

You can download the full code of this demo app here. Request API keys for the LLMs you want to experiment with. Since this application also uses the TMS FNC Planner, make sure that, besides TMS AI Studio, you also have TMS FNC UI Pack installed. The demo app is built with the FireMonkey framework and is therefore cross-platform, but you can just as well use all these components in an existing 32-bit or 64-bit Windows VCL application.

It is interesting to see how differently the various LLMs handle the scheduling. A more precise prompt would certainly streamline things, but with the default prompt it is remarkable that Claude, for example, adds 30 minutes of extra time to each scheduled maintenance as a sort of safety margin. OpenAI was quite reliable and consistent. Gemini, on the other hand, often ignores the working hours or the lunch break, mainly because it tends to use the worst-case time for a maintenance task, whereas OpenAI and Claude tend to use the best-case time. All in all, these experiments nicely surface the particularities of the different LLMs and their models!

Conclusion

With TMS AI Studio, the age of intelligent Delphi apps is here. You can now build applications that feel responsive, context-aware, and almost human in their interactions—all within the familiar and powerful world of native Delphi development.

Whether you're scheduling car repairs, managing bookings, or building custom tools for logistics, TMS AI Studio + LLMs will give your app superpowers.

Bruno Fierens

Related Blog Posts

- Add AI superpower to your Delphi & C++Builder apps part 1
- Add AI superpower to your Delphi & C++Builder apps part 2: function calling
- Add AI superpower to your Delphi & C++Builder apps part 3: multimodal LLM use
- Add AI superpower to your Delphi & C++Builder apps part 4: create MCP servers
- Add AI superpower to your Delphi & C++Builder apps part 5: create your MCP client
- Add AI superpower to your Delphi & C++Builder apps part 6: RAG
- Introducing TMS AI Studio: Your Complete AI Development Toolkit for Delphi
- Automatic invoice data extraction in Delphi apps via AI
- AI based scheduling in classic Delphi desktop apps
- Voice-Controlled Maps in Delphi with TMS AI Studio + OpenAI TTS/STT
- Creating an n8n Workflow to use a Logging MCP Server
- Supercharging Delphi Apps with TMS AI Studio v1.2 Toolsets: Fine-Grained AI Function Control
- AI-powered HTML Reports with Embedded Browser Visualization
- Additional audio transcribing support in TMS AI Studio v1.2.3.0 and more ...
- Introducing Attributes Support for MCP Servers in Delphi
- Using AI Services securely in TMS AI Studio
- Automate StellarDS database operations with AI via MCP
- TMS AI Studio v1.4 is bringing HTTP.sys to MCP
- Windows Service Deployment Guide for the HTTP.SYS-Ready MCP Server Built with TMS AI Studio
- Extending AI Image Capabilities in TMS AI Studio v1.5.0.0
- Try the Free TMS AI Studio RAG App

This blog post has received 7 comments.

2. Tuesday, August 5, 2025 at 4:49:30 PM

A good experiment indeed. We'll keep it in mind for a future demo.

Bruno Fierens

3. Thursday, August 7, 2025 at 11:40:31 AM

I’m truly amazed. The possibilities with TMS AI feel infinite: absolutely brilliant and inspiring. It’s like I’ve traveled back to when I was 13, discovering the magic of computers for the first time, from the Commodore 64 and BASIC to the world of PCs, Delphi, databases, image display, and audio playback.

Now, with TMS AI, that same excitement is alive again — but with superpowers I could only dream of back then!

Mehrdad Esmaili

4. Thursday, August 28, 2025 at 2:04:39 PM

Hello. I'm a big fan of TMS WEB Core for Visual Studio; it's awesome! How does TMS AI Studio differ from TMS WEB Core and from Copilot, which is a free AI service? Do we need to pay for external AI services to use TMS AI Studio in Delphi?

Ertan Baykal

5. Thursday, August 28, 2025 at 2:28:23 PM

TMS AI Studio is designed to make it easy to use AI at application level. So, this is not for code generation but for integration into applications where applications can benefit from AI. TMS AI Studio offers access to a range of AI services, like OpenAI, Claude, Gemini, Ollama, Mistral, ... so depending on the AI service you are using, there might be a cost from the AI service for it.

Bruno Fierens

6. Thursday, August 28, 2025 at 3:35:56 PM

Very interesting. Let's say I have a huge data set in a TMS grid. Can it analyse the data and extract all outliers from the data set, given the correct prompt to the AI? That would be awesome for cleaning the data.

Ertan Baykal

7. Thursday, August 28, 2025 at 3:54:53 PM

If you provide a tool to the LLM to access the grid cells, yes.

Bruno Fierens
