Taking advantage of Google MediaPipe ML from Delphi TMS WEB Core

Thursday, June 20, 2024

Implementing machine learning in your applications can be a daunting task. However, TMS WEB Core and the vast number of available JavaScript libraries make this much easier. To demonstrate this, we created a demo using the Google MediaPipe library. This library offers many features, including hand, face and language detection, all available through pre-trained models. In this blog post we tackle hand landmark detection and tracking the position of the hand within a frame. The full source code of the demo is available on GitHub.

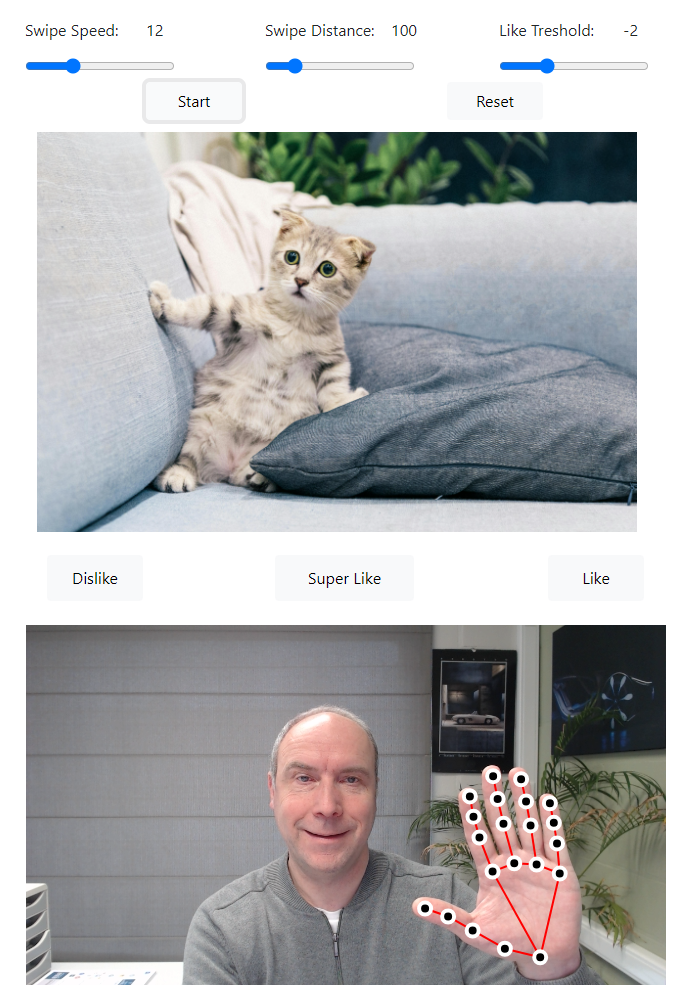

Demo

For our demo, we created a Tinder-style application where you can like, dislike or super like a pet using hand gestures. The hand gestures are as follows: swipe left to dislike, swipe right to like and bring your hand closer to the camera to super like.

First of all, we need to initialize the camera. This involves creating a new camera object and starting it so that it captures video input.

    obj := TMediaPipeInitOBject.new;
    obj.width := 640;
    obj.height := 360;
    obj.onFrame := doCameraOnFrame;
    camera := TMediaPipeCamera.new(videoElement, Obj);
    camera.start;

When creating this object, we assign an asynchronous onFrame event handler that attempts to recognise hands in every frame. The handler does this by sending the HTML video element of the TWebCamera to the hands model.

    procedure TformMediapipe.doCameraOnFrame(AEvent: TJSEvent);
    begin
      TAwait.ExecP<JSValue>(hands.send(new(['image', videoElement])));
    end;

We need to create a new object with the settings for the MediaPipe model. This configuration will determine how the hand tracking will operate.

    jsObj := new([]);
    jsObj.Properties['selfieMode'] := 1;
    jsObj.Properties['maxNumHands'] := 1;
    jsObj.Properties['modelComplexity'] := 1;
    jsObj.Properties['minDetectionConfidence'] := 0.5;
    jsObj.Properties['minTrackingConfidence'] := 0.5;

Next, we create a hands object that tells MediaPipe where to load the files needed for hand recognition.

    handObj := TMediaPipeInitHands.new;
    handObj.locateFile := function(aFile: string): string
      begin
        result := 'https://cdn.jsdelivr.net/npm/@mediapipe/hands/' + aFile;
      end;

After creating all necessary objects and setting the options, we set a callback that runs every time a hand is recognised in a frame. In this callback, we draw circles and lines to show the landmarks and skeleton of the hand. Next, we use a wrapper class to get the coordinates of the tip of the middle finger.

    TMediaPipeCoords = class external name 'Coords' (TJSObject)
      x: double;
      y: double;
      z: double;
    end;

With these coordinates we can detect the following gestures:

- Close in: When the z-value in the current frame is smaller than the super like threshold.

- Swipe left or right: When the hand speed is higher than the speed threshold and the distance between this frame and the previous frame is higher than the distance threshold.

  - Left: When the current x-value minus the previous x-value is negative.

  - Right: When the current x-value minus the previous x-value is positive.
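The rules above can be sketched as a small, self-contained console program. This is only an illustration and not the code from the demo: the names `TGesture`, `TPoint3` and `ClassifyGesture`, the three threshold values, and the use of elapsed time in seconds to compute speed are all assumptions made for this example. The record simply mirrors the x, y and z fields of the wrapper class shown earlier.

```pascal
program GestureSketch;

{$IFDEF FPC}{$mode objfpc}{$ENDIF}

type
  TGesture = (gNone, gLike, gDislike, gSuperLike);

  // Plain record with the same x/y/z fields as the coordinates wrapper.
  TPoint3 = record
    x, y, z: Double;
  end;

const
  // Hypothetical thresholds; tune them for your camera and frame rate.
  SuperLikeZ  = -0.15; // z below this counts as "hand close to the camera"
  MinSpeed    = 1.0;   // normalized distance per second
  MinDistance = 0.08;  // normalized distance between two frames

  GestureNames: array[TGesture] of string =
    ('none', 'like', 'dislike', 'super like');

function ClassifyGesture(const Prev, Curr: TPoint3;
  ElapsedSec: Double): TGesture;
var
  dx, dy, dist: Double;
begin
  Result := gNone;

  // Close in: the z-value is smaller than the super like threshold.
  if Curr.z < SuperLikeZ then
    Exit(gSuperLike);

  // Without a positive time step no speed can be computed.
  if ElapsedSec <= 0 then
    Exit;

  dx := Curr.x - Prev.x;
  dy := Curr.y - Prev.y;
  dist := Sqrt(dx * dx + dy * dy);

  // Swipe: both the distance and the speed must exceed their thresholds.
  if (dist > MinDistance) and (dist / ElapsedSec > MinSpeed) then
  begin
    if dx < 0 then
      Result := gDislike // current x minus previous x is negative: left
    else if dx > 0 then
      Result := gLike;   // current x minus previous x is positive: right
  end;
end;

var
  A, B: TPoint3;
begin
  // Two consecutive fingertip positions, roughly one frame apart.
  A.x := 0.30; A.y := 0.50; A.z := -0.05;
  B.x := 0.45; B.y := 0.50; B.z := -0.05;
  WriteLn(GestureNames[ClassifyGesture(A, B, 0.033)]); // prints "like"
end.
```

In a real application the previous coordinates and a timestamp would be stored between calls of the results callback, and a short cooldown after each recognised gesture would help to avoid triggering the same swipe several times.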

And just like that, we successfully implemented hand gestures in Delphi using the Google MediaPipe library. This integration allows for richer user interaction and creates a more engaging experience. The use of machine learning and hand tracking opens up numerous possibilities for developing innovative applications that respond to human gestures.

See it for yourself in this video we recorded:

Written by Niels Lefevre, intern at TMS software in school year 2023/2024.

This blog post has received 2 comments.

2. Friday, June 21, 2024 at 11:44:48 AM

Awesome Job!

Ere Ebikekeme

Miesen Mirko