Extend TMS WEB Core with JS Libraries with Andrew:

Modern-Screenshot and FFmpeg

Thursday, April 6, 2023

Motivation.

There are a few instances where we might want to take a screenshot of our app directly while it is running. Perhaps we're creating promotional material or documentation of some kind. Or perhaps there's an issue with our app, and we'd like the user to send us a screenshot showing what the problem is. Or perhaps we'd like to get a screenshot of the error whether the user wants to report it or not.

Some bug-tracking tools can automatically include a screenshot when logging exceptions, as in traditional VCL applications. I'm a fan of EurekaLog for example, which has long offered this capability. I'm sure there are several others that do the same. It also isn't entirely unreasonable to expect users to be able to produce a screenshot when they're reporting an error. But sometimes it might be best to get at that information directly, particularly if the "error" isn't one that they might be presented with directly.

There may be scenarios where certain application conditions are met that we might want to know about, as developers, and the extra supporting material, like a screenshot, may help. Or perhaps our application is running in some kind of unattended kiosk mode, where there isn't an option for errors to be reported directly, or the users wouldn't be expected to do so.

Extending the idea of screenshots a little further, there can be situations, particularly in those kiosk applications, where it might be informative to capture a number of screenshots over a period of time. Maybe a short time at a higher frequency, to monitor a particular type of usage. Or maybe screenshots collected over a much longer time at a considerably lower frequency to monitor application state transitions that might not normally be readily apparent. To record this kind of information, having a video showing the period of time in question can be just as helpful, if not more helpful, than a folder full of hundreds of screenshots.

Regardless of the rationale behind the desire to capture screenshots or video, getting these tools, particularly FFmpeg itself, to work entirely within our web application presents a few interesting technical challenges. Solving those can help set the stage for quite an array of additional capabilities in our projects.

Note also that there could be security or privacy considerations when collecting this kind of data. However, it is likely to be less of an issue because, well, this is done entirely within our app, so we can control everything already anyway. And, importantly, we can't really see anything outside our app. Even the content of <iframe> elements is blocked, which might be more appropriate in some settings than something else that captures the actual screen output. In other words, we can't really do anything with this approach that we couldn't already do otherwise. Any concerns about the privacy or security implications would also apply to the rest of our application. Something to keep in mind.

Screenshots.

The ability to capture all or part of a browser page and store it as an image is not a new capability. In fact, we've even covered it in our blog posts previously. In our first Labels and Barcodes post (which we'll be getting back to shortly), we used the very capable dom-to-image JavaScript library to do the same thing - converting a block of HTML, containing a label, into an image. In fact, dom-to-image and modern-screenshot are both descendants of other similar JavaScript libraries. For this post, modern-screenshot was chosen primarily because it is one of the more recently updated JavaScript libraries claiming to do the same thing - converting all or part of the HTML DOM to an image format.

For our example in this post, we're going to start by adding the ability to capture screenshots and videos to our ongoing Catheedral project. But don't worry about that - there's nothing particularly special here (aside from the screenshots of course) that is specific to that project - this is all pretty well compartmentalized into just a few key areas. Easily adaptable to other projects, as we'll see.

To start with, as usual, we'll need links to the JavaScript libraries, either by editing our Project.html file or by using the Manage JavaScript Libraries functionality within the Delphi IDE. The usual JSDelivr CDN links are as follows.

<!-- Modern Screenshot -->

<script src="https://cdn.jsdelivr.net/npm/modern-screenshot@4.4.9/dist/index.min.js"></script>

<!-- ffmpeg -->

<script src="https://cdn.jsdelivr.net/npm/@ffmpeg/ffmpeg@0.11.6/dist/ffmpeg.min.js"></script>

To capture a screenshot, what we're ultimately going to end up with is a PNG file that is Base64-encoded and stored as a DataURI string. We'll convert it later into a binary PNG file, but for now, this makes it pretty easy to work with. We can even output this string to the browser console while we're working on the code. All we're interested in doing initially is capturing an image whenever a TWebTimer event fires. The newly captured image is just added to an array we've called CaptureData that is defined as a JSValue in the Form.

procedure TForm1.tmrCaptureTimer(Sender: TObject);

begin

asm

// Maximum capture - 1800 frames (30m @ 1ps, > 1d at 1fpm)

if (pas.Unit1.Form1.CaptureData.length <= 1800) {

modernScreenshot.domToPng(document.querySelector('body')).then(dataURI => {

pas.Unit1.Form1.CaptureData.push(dataURI);

btnRecord.innerHTML = '<div class="d-flex align-items-center justify-content-start"><i class="fa-solid fa-circle-dot fa-fw ps-1 me-2 fa-xl"></i>Recording frame #'+pas.Unit1.Form1.CaptureData.length+'</div>'

});

}

end;

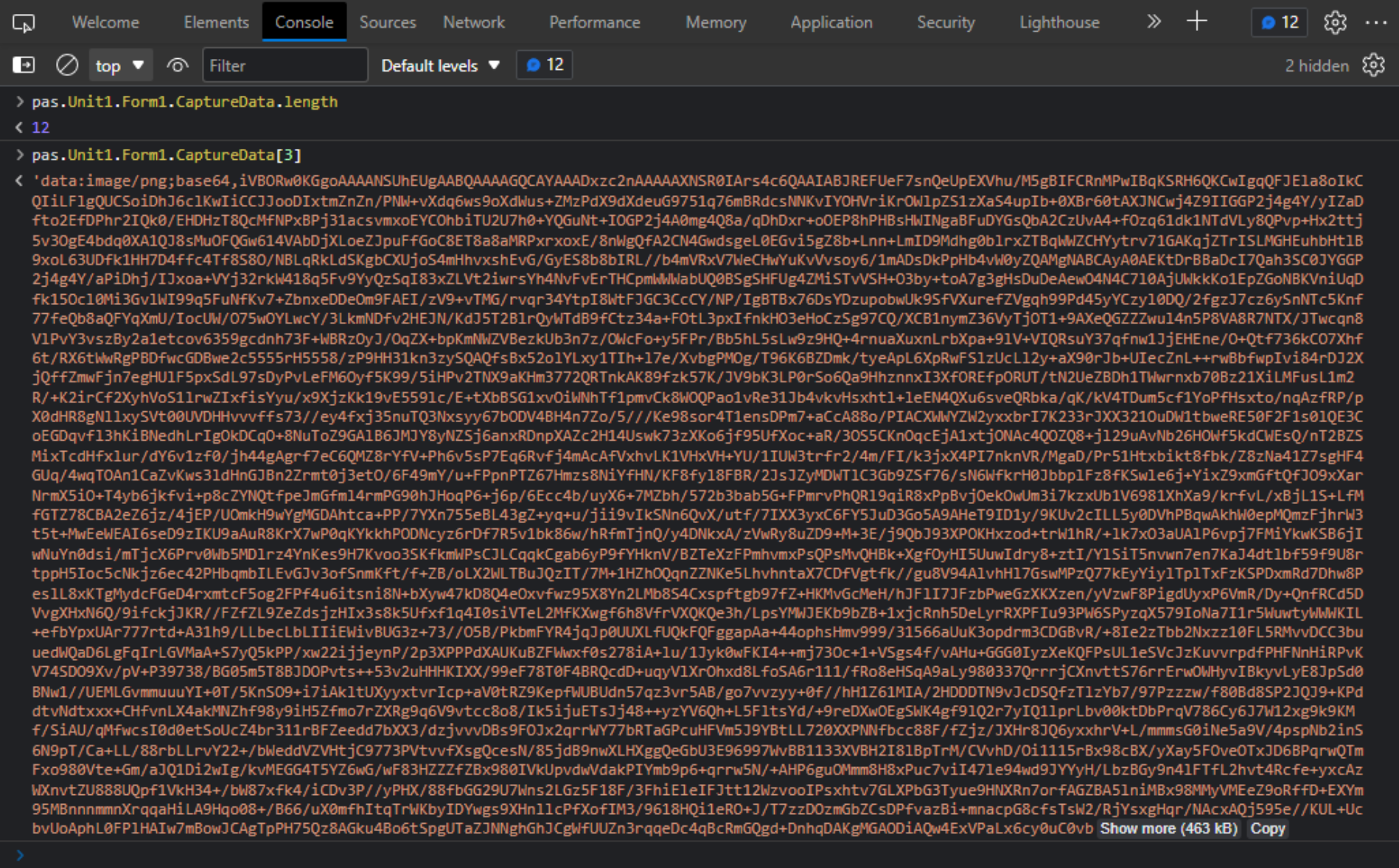

end;Every time this timer event fires, a screenshot is generated and then pushed onto the CaptureData array. If we want to look at its contents, we can do it in the browser developer tools. Here's what that looks like after a few images have been captured.

Screenshots as DataURI.

Note that this can generate quite a lot of data - this is a small screenshot (1280px x 400px) and it is already

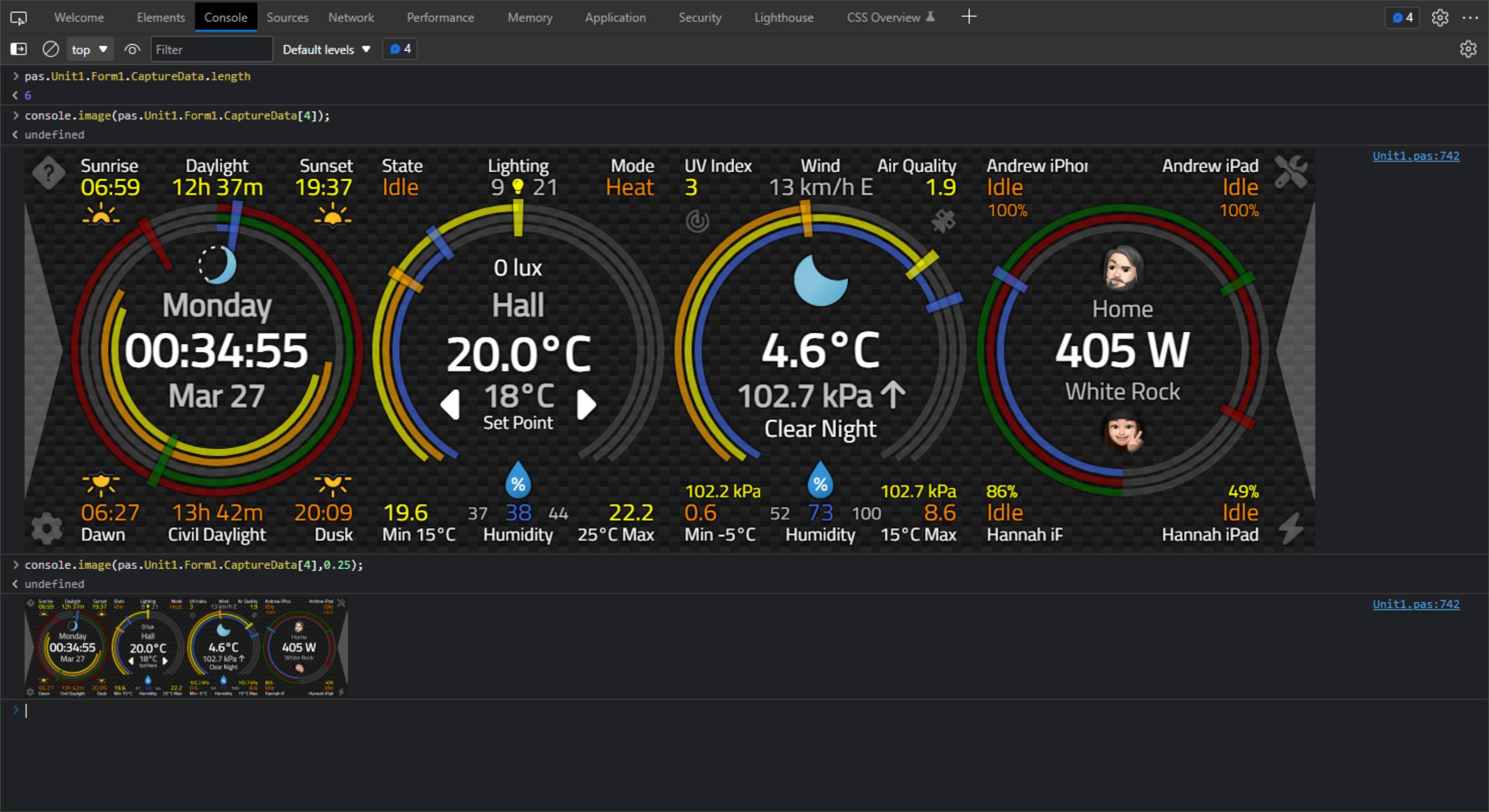

400kb+ per image. What if we want to see the image in the console? Tricky, that. But possible. Here's a bit

of code that we can add to our application that will give us a "console.image" function that we can use

elsewhere. We can also just copy and paste this directly into the console and enable it that way. But then

we'd have to do it each time.
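To get a rough feel for how much data is piling up, the decoded size of each frame can be estimated from the length of its Base64 text, since every four Base64 characters carry three bytes. This is only a sketch: the helper names dataUriByteLength and totalCaptureBytes are made up for illustration and are not part of the project.

```javascript
// Estimate the decoded size, in bytes, of a Base64-encoded DataURI.
// Every 4 Base64 characters encode 3 bytes; trailing '=' padding encodes none.
function dataUriByteLength(dataUri) {
  const base64 = dataUri.slice(dataUri.indexOf(',') + 1);
  const padding = base64.endsWith('==') ? 2 : base64.endsWith('=') ? 1 : 0;
  return (base64.length * 3) / 4 - padding;
}

// Sum the decoded sizes of every frame in a CaptureData-style array.
function totalCaptureBytes(frames) {
  return frames.reduce((sum, uri) => sum + dataUriByteLength(uri), 0);
}
```

Something like totalCaptureBytes(pas.Unit1.Form1.CaptureData) could then be logged alongside the frame counter while experimenting with capture rates.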

The original version of this (attribution) seemed to create double-height images for some reason, so I've adjusted it, but perhaps check the source if you encounter different behavior. This can be added to FormCreate or wherever else JavaScript functions might be initialized in your project. Note also that console.image() works in Chrome and Edge but doesn't work in Firefox. Maybe it could be adapted, but I didn't pursue it any further.

function getBox(width, height) {
  return {
    string: "+",
    style: "font-size: 1px; padding: " + Math.floor(height/4) + "px " + Math.floor(width/2) + "px; line-height: " + height/2 + "px;"
  };
}

console.image = function(url, scale) {
  scale = scale || 1;
  var img = new Image();
  img.onload = function() {
    var dim = getBox(this.width * scale, this.height * scale);
    console.log("%c" + dim.string, dim.style + "background: url(" + url + "); background-size: " + (this.width * scale) + "px " + (this.height * scale) + "px; color: transparent;");
  };
  img.src = url;
};

With those functions defined, we can now do something like this. The first parameter to console.image() is the DataURI, and the second (optional) parameter is the scale.

Screenshots Displayed in Console.

So that's pretty fun, isn't it? It sure is! We could even add this to our timer event to show the images in the console automatically as they're generated, at least while we're getting started here.

procedure TForm1.tmrCaptureTimer(Sender: TObject);
begin
  asm
    // Maximum capture - 1800 frames (30m @ 1fps, > 1d @ 1fpm)
    if (pas.Unit1.Form1.CaptureData.length < 1800) {
      modernScreenshot.domToPng(document.querySelector('body')).then(dataURI => {
        console.image(dataURI,0.5);
        pas.Unit1.Form1.CaptureData.push(dataURI);
        btnRecord.innerHTML = '<div class="d-flex align-items-center justify-content-start"><i class="fa-solid fa-circle-dot fa-fw ps-1 me-2 fa-xl"></i>Recording frame #'+pas.Unit1.Form1.CaptureData.length+'</div>'
      });
    }
  end;
end;

Fun with CSS, HTML, and CORS.

Alright, that sure looked easy, didn't it? Well, it can be that easy, but it is likely that there will be a few bumps along the way. What modern-screenshot and similar JavaScript libraries are typically doing, internally, is rendering a clone of the selected DOM element (the whole page in our example) into an HTML <canvas> element, and then extracting an image from it.

And as soon as an HTML <canvas> element enters the scene (in my opinion, anyway!) it's time to check that we have plenty of Ibuprofen on hand for the ensuing inevitable headaches. In this case, we've got a few problems. In our example above, these have all been addressed, but they may be lurking in your project, so have a quick look if you find that your screenshot images do not match what your page normally looks like.

- Initially, most of the issues are likely to do with CORS. And that's a topic that can cause even more headaches than <canvas> elements! The problem here is that when the modern-screenshot library goes to fetch content to render in the <canvas> element, it is likely going to make calls to the original CSS libraries. These may reside on remote servers that are expecting to serve data a certain way, and which may complain about these extra requests, issuing more CORS errors than anyone ever needs to see. The quick fix is to add a new attribute to the various stylesheets in our Project.html file - the crossorigin attribute. For example, if you use Font Awesome in your projects, those icons may not appear in the generated screenshots. To correct it, we can update the link like this.

<link href="https://cdn.jsdelivr.net/npm/@fortawesome/fontawesome-free@6/css/all.min.css" rel="stylesheet" crossorigin="anonymous">

Just adding the crossorigin attribute for all the <link> stylesheets in the project will likely cover quite a number of problems, but not all of them.

- For example, this didn't work for the Material Design Icons that are used in the Catheedral project, which are in turn what Home Assistant uses. This was just a regular WebFont collection of icons that we've been using for quite a while in the Catheedral project. No amount of fiddling seemed to get them to appear in our screenshots. But there's more than one way to add Material Design Icons to a project, and they strongly discourage using the WebFont approach anyway. So instead, they were replaced with the Iconify SVG version of the icons. Which wasn't exactly a trivial change, but not terrible. Essentially, we were using icons like this.

<span class="mdi mdi-home-assistant"></span>

And under the new system, we have to use them like this.

<iconify-icon icon="mdi:home-assistant"></iconify-icon>

This change had to be made in about a dozen places. So not terrible, but in the end, this is likely a better approach anyway. And just like that, the icons appeared in the screenshots without any further problems.

- Even with all that taken care of, some of the images were not being rendered properly - specifically the Person photos on the home page. This is seemingly a CORS issue with the Home Assistant server, so some adjustments were made there to compensate for this. I would rate my personal capacity for dealing with CORS as somewhere between "extremely low" and "non-existent" but fortunately there are settings within Home Assistant that seem to address this. As is often the case with Home Assistant documentation, this tends to be a hit-or-miss affair. Two changes seem to have resolved the issue. First, in the Home Assistant configuration.yaml, this extra bit was needed.

http:
  cors_allowed_origins:
    - '*'

And in our project, we also needed to add the crossorigin attribute to the images themselves.

divPerson1.ElementHandle.firstElementChild.setAttribute('crossorigin','anonymous');

This seems to be working still, several days later, though sometimes the image is now not rendered properly in the actual application. A reload tends to fix it but I'm not sure whether this is a proper fix or a poorly applied band-aid.

- Other rendering problems may also crop up. The JavaScript library is essentially re-applying all the CSS on the page when generating the HTML <canvas> element, and sometimes things don't go according to plan. One example in the Catheedral project was that the borders around the input elements on the Configuration page were just missing. They're rendered fine in the actual app, but were not rendered in the generated screenshot. The workaround, in this case, was to apply border properties to the elements directly rather than just relying on the CSS.

- The <input> elements (like TWebEdit for example) also initially displayed the placeholder text instead of the actual entered values. To get around this, the "record" button first goes through these and assigns the TWebEdit.Text property to the element's "value" attribute. Presumably, the rendering mechanism doesn't look anywhere else other than the HTML or CSS, so if something isn't present in one of those places, it isn't included. Or maybe it is just a bug.

- Similarly, there was a problem with a block of text that normally would wrap automatically to fit into a set amount of space. In the screenshot, though, this wrapping never occurred. An explicit width was added to the element, which didn't affect the normal display but did fix the wrapping in the screenshot. The thought that comes to mind here is that the browser "flex" mechanism is perhaps more current than whatever is being used by the screenshot code, somehow. Or maybe it is also just a bug.

- By design, anything in an <iframe> will not be rendered. This is a limitation of the way this all works. Nothing to be done about it really. In the Catheedral project, this means that some of the recent additions - the RainViewer satellite and radar weather pages, for example - are not rendered. The Leaflet mapping library, on the other hand, isn't rendered through an <iframe> so it actually works great, after updating all the icons of course.
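The placeholder workaround above can also be written generically, rather than one edit field at a time. The following is a minimal sketch, assuming a hypothetical helper called syncInputValueAttributes that is not part of the Catheedral project. It copies the live value of every <input> under a root element into its "value" attribute, which is where the screenshot code appears to look.

```javascript
// Copy each <input>'s current value into its "value" attribute so that
// anything rendering from the HTML alone sees the entered text.
// Returns the number of inputs that were updated.
function syncInputValueAttributes(root) {
  let count = 0;
  for (const input of root.querySelectorAll('input')) {
    input.setAttribute('value', input.value);
    count += 1;
  }
  return count;
}

// Possible usage just before recording starts:
//   syncInputValueAttributes(document.body);
```

Note that this also writes password-type fields into the DOM as plain attributes, so a more selective query may be preferable in some projects.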

Another big consideration here is that it does take time for this rendering to happen. This ultimately means that it is unlikely that a very high framerate is going to be possible - maybe manage expectations for something around 1 frame per second to be on the safe side. This is very different from, say, a desktop screenshot/video capturing solution, where those applications might have access to the underlying operating system window buffer/bitmap contents, where no HTML/CSS rendering is required. This means those tools can achieve a much higher framerate than we can here. It also helps that they can use a more performant language than JavaScript to get the job done.
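Because a capture can take longer than the timer interval, one option is to skip a timer tick whenever the previous capture is still in progress, so captures never pile up. The sketch below assumes a hypothetical helper, createCaptureTick, which is not in the original project; captureFrame stands in for any function that returns a Promise of a DataURI, such as a call to modernScreenshot.domToPng().

```javascript
// Build a tick function that captures one frame at a time.
// captureFrame: () => Promise<string> (hypothetical stand-in for domToPng)
// frames:       array that receives the captured DataURIs
// maxFrames:    stop capturing once this many frames are stored
function createCaptureTick(captureFrame, frames, maxFrames) {
  let busy = false;
  return async function tick() {
    // Skip this tick if a capture is still running or the limit is reached
    if (busy || frames.length >= maxFrames) return false;
    busy = true;
    try {
      frames.push(await captureFrame());
      return true;
    } finally {
      busy = false;
    }
  };
}

// Possible usage from the timer event:
//   const tick = createCaptureTick(
//     () => modernScreenshot.domToPng(document.querySelector('body')),
//     pas.Unit1.Form1.CaptureData, 1800);
//   tick();
```

The trade-off is that skipped ticks leave gaps in the timeline, so the effective capture rate may be lower than the configured one.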

It may well be that the screenshot that is generated is rendered perfectly right out of the gate, or it may take a bit of tweaking to get there. A lot of this depends on the complexity of the page being rendered. The Catheedral app is likely more toward the complex end of the spectrum and thus had a few more problems than might be normally expected. Ultimately, though, we should end up with an array of screenshot images.

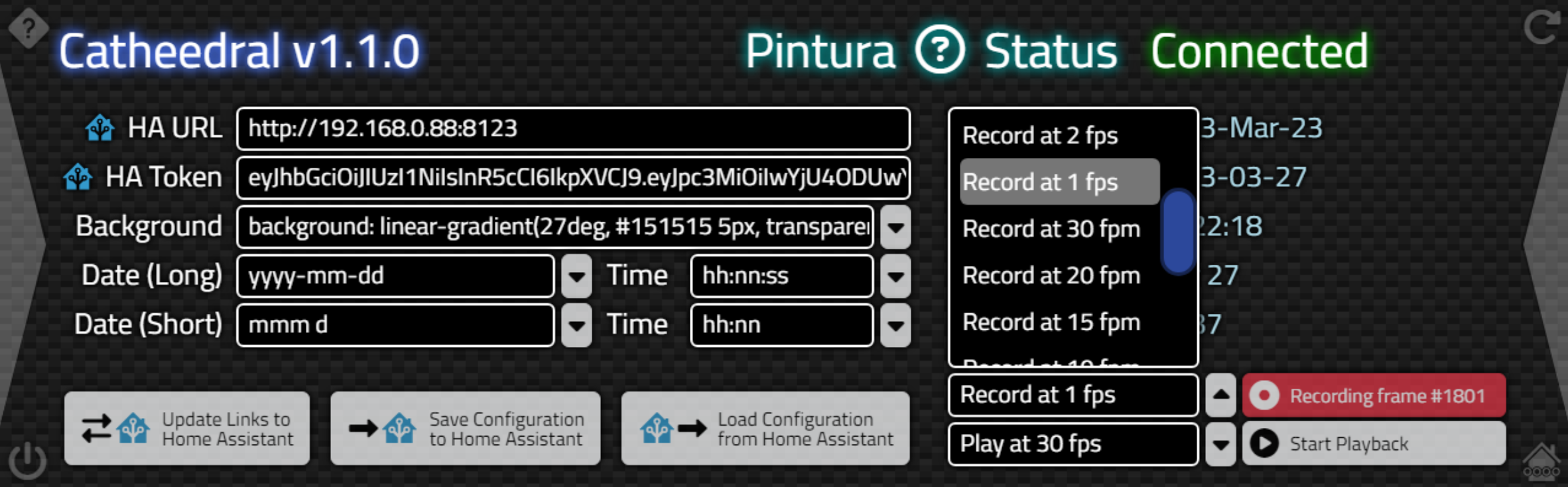

Capture UI.

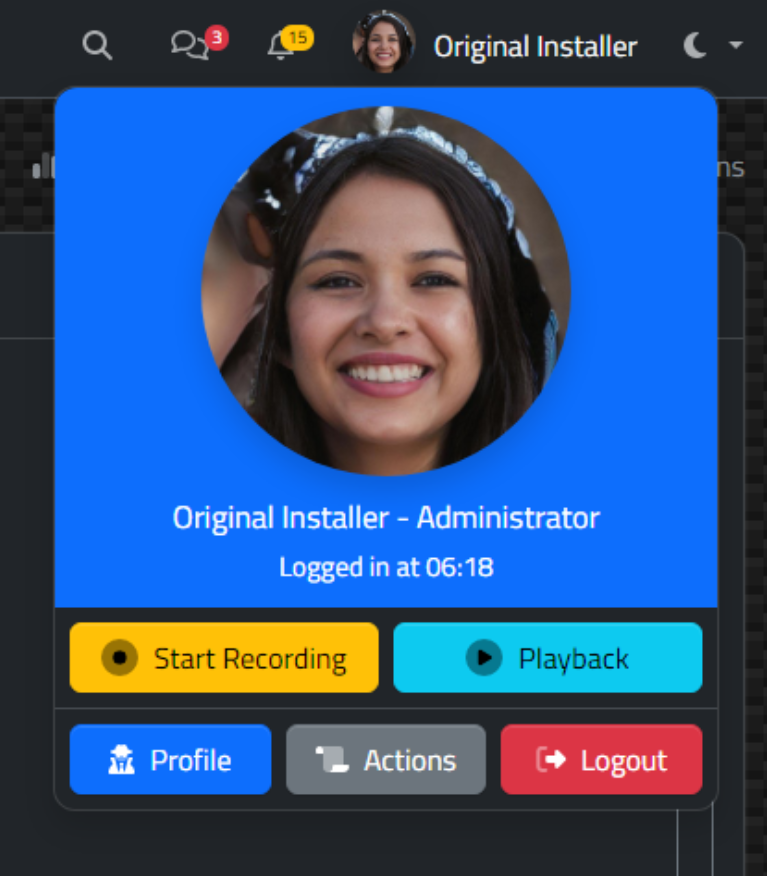

In order to give users a bit of control over this process, if they are to be involved at all, one approach might be to set up a pair of buttons - one to start/stop recording and one to start playback. And maybe a pair of options - one for the recording rate and one for the playback rate. Here's what it looks like in the Catheedral UI - just two buttons and two lists.

Recording / Playback Interface.

For recording, this is just a toggle button to start and stop recording frames. Each new recording erases the previous recording. What we're really after here is just setting the TWebTimer interval to determine how often the frames are captured. There are a few other odds and ends thrown in as well. Such as the aforementioned "value" vs. Text property situation. And a bit of work to change the styles for the buttons to indicate something is going on.

procedure TForm1.btnRecordClick(Sender: TObject);
var
  RecordRate: Integer;
  FrameCount: Integer;
begin
  if btnRecord.Tag = 0 then
  begin
    btnRecord.Tag := 1;

    // Default 1 fps
    RecordRate := 1000;
    if pos('fps', editConfigRecordRate.Text) > 0
    then RecordRate := 1000 div StrToInt(Trim(Copy(editConfigRecordRate.Text,11,2)));
    if pos('fpm', editConfigRecordRate.Text) > 0
    then RecordRate := 60000 div StrToInt(Trim(Copy(editConfigRecordRate.Text,11,2)));
    tmrCapture.Interval := RecordRate;
    tmrCapture.Enabled := True;

    // Start with fresh capture
    asm this.CaptureData = []; end;

    // Set <input>.value so it gets rendered
    editConfigURL.ElementHandle.setAttribute('value',editConfigURL.Text);
    editConfigToken.ElementHandle.setAttribute('value',editConfigTOKEN.Text);
    editConfigBackground.ElementHandle.setAttribute('value',editConfigBACKGROUND.Text);
    editConfigSHORTDATE.ElementHandle.setAttribute('value',editConfigSHORTDATE.Text);
    editConfigLONGDATE.ElementHandle.setAttribute('value',editConfigLONGDATE.Text);
    editConfigSHORTTIME.ElementHandle.setAttribute('value',editConfigSHORTTIME.Text);
    editConfigLONGTIME.ElementHandle.setAttribute('value',editConfigLONGTIME.Text);
    editConfigRecordRate.ElementHandle.setAttribute('value',editConfigRecordRate.Text);
    editConfigPlaybackRate.ElementHandle.setAttribute('value',editConfigPlaybackRate.Text);

    // Configure Record button as recording
    btnRecord.ElementClassName := StringReplace(btnRecord.ElementClassName,'btn-light','btn-danger',[]);
    btnRecord.Caption := '<div class="d-flex align-items-center justify-content-start"><i class="fa-solid fa-circle-dot fa-fw ps-1 me-2 fa-xl"></i>Recording...</div>';

    // Reset Playback button
    btnPlayback.ElementClassName := StringReplace(btnPlayback.ElementClassName,'btn-danger','btn-light',[]);
    btnPlayback.ElementClassName := StringReplace(btnPlayback.ElementClassName,'btn-warning','btn-light',[]);
    btnPlayback.ElementClassName := StringReplace(btnPlayback.ElementClassName,'btn-primary','btn-light',[]);
    btnPlayback.Caption := '<div class="d-flex align-items-center justify-content-start"><i class="fa-solid fa-circle-play fa-fw ps-1 me-2 fa-xl"></i>Start Playback</div>';
  end
  else
  begin
    btnRecord.Tag := 0;
    tmrCapture.Enabled := False;

    asm
      await sleep(1000);
      FrameCount = pas.Unit1.Form1.CaptureData.length;
    end;

    // Reset Record Button
    btnRecord.ElementClassName := StringReplace(btnRecord.ElementClassName,'btn-danger','btn-light',[]);
    btnRecord.Caption := '<div class="d-flex align-items-center justify-content-start"><i class="fa-solid fa-circle-dot fa-fw ps-1 me-2 fa-xl"></i>Recorded '+IntToStr(FrameCount)+' frames</div>';
  end;
end;

Clicking on the button, then, will kick off the TWebTimer (tmrCapture) and start appending images to the CaptureData array, setting various buttons and properties along the way. A frame counter shows how many frames have been captured, and a limit has been set in the timer to stop at a certain point. 1,800 images correspond to 30 minutes if recording at 1 frame per second, or more than a day if recording at 1 frame per minute.

What this boils down to in terms of the final video, though, is determined by the playback rate, which is set separately. For example, recording 1 fps for 30 minutes will generate 1 minute of video if played back at 30 frames per second. Recording 1 fpm for 1,800 minutes (30 hours) will also generate 1 minute of video if played back at 30 frames per second. We'll get to the playback button in a little bit. But now we've got perhaps as many as 1,800 images. What next?
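The arithmetic behind those numbers is simple enough to capture in a couple of helper functions. This is just an illustrative sketch; recordingSeconds and videoSeconds are hypothetical names and do not appear in the project.

```javascript
// How long it takes to record frameCount frames when one frame is
// captured every captureIntervalMs milliseconds.
function recordingSeconds(frameCount, captureIntervalMs) {
  return (frameCount * captureIntervalMs) / 1000;
}

// How long the resulting video runs when played back at playbackFps.
function videoSeconds(frameCount, playbackFps) {
  return frameCount / playbackFps;
}

// 1,800 frames at 1 fps (1000 ms interval) is 1,800 s, or 30 minutes.
// 1,800 frames at 1 fpm (60000 ms interval) is 108,000 s, or 30 hours.
// Either way, 1,800 frames played back at 30 fps is 60 s of video.
```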

FFmpeg.

For those not familiar, a brief introduction to FFmpeg is in order. The name comes from "Fast-Forward mpeg" (from Wikipedia), a nod to MPEG (Moving Picture Experts Group) - those responsible for a great many of the video and audio coding and compression file formats and algorithms that are in use today. FFmpeg has been around for more than 20 years now and is perhaps most commonly used as a command-line tool for manipulating or otherwise working with audio and video files of all kinds, especially when it comes to compressing or converting data from a raw and uncompressed source into a more efficient and smaller compressed form.

With its broad support of video and audio codecs, and so many command-line options available to perform assorted tasks, it can often be found lurking within other projects. Mostly, it is used to perform all the heavy lifting tasks often required when working with these kinds of files. Like we're going to be doing here in just a moment.

Because of the heavy processing cost of some of its main tasks, like converting codecs or processing incoming video in real-time, there may well be hardware-accelerated support for many of its functions, while it maintains the ability to do most everything in software if those supports are not available. This makes it very flexible and also potentially very performant in suitably equipped environments, and is perhaps a key to its longevity as well as its performance.

FFmpeg.wasm.

Moving to a web environment presents a few unique challenges for FFmpeg, largely due to both a lack of this kind of hardware support in many browser environments and a need for low-level and high-performance code to be able to complete encoding tasks in a reasonable amount of time (seconds instead of hours). So a straight JavaScript port was not really an option. Instead, there is FFmpeg.wasm (a separate open-source project rather than part of FFmpeg itself) - a WebAssembly version of FFmpeg that does a fantastic job of replicating the command-line environment of FFmpeg in a way that we can access through JavaScript. This is what we're going to be working with.

And what is WebAssembly (wasm)? Another topic worth exploring perhaps. Briefly, WebAssembly is kind of like a virtual machine, with its own instruction set, binary formats and so on that allow applications to be compiled into something that can be run at near-native speeds in many environments. I know, that kind of sounds like some other technologies, doesn't it? In any event, the end result is that an application compiled into WebAssembly has its own sandbox to run in, and even supports features like multi-threading - not something JavaScript will be offering anytime soon. So it is a great candidate for image and video processing code where near-native speed is critically important.

There are a lot of interesting implications that arise out of WebAssembly. For example, it is possible to compile many languages into WebAssembly, including Pascal and now even JavaScript. Perhaps in the not-too-distant future, web applications will routinely be packaged up in WebAssembly containers of some kind, and JavaScript's many limitations (like the lack of multi-threading) will force it into a well-deserved retirement.

FFmpeg.FS.

One of the challenges of replicating the command-line environment that FFmpeg is normally used in, though, is file handling. If you've worked with JavaScript and browsers and client files for any amount of time, you might well be aware of the limitations here. Browser sandboxing is likely to thwart any kind of underlying local file access that we completely take for granted in a normal command-line environment. And FFmpeg really likes to work with a lot of files at the same time. Like with what we're doing here - combining hundreds or even thousands of images into a single video file. Imagine if we had to ask the user each time we wanted to download or upload one of those files? Not good.

To get around this, we can make use of the "file system" that FFmpeg.wasm has made available. This is kind of like a virtual disk, where we can add or remove whatever files we want, and name them whatever we want. We can then run what might perhaps be familiar FFmpeg commands that read and write these files using normal wildcard filenames and other command-line parameters that have been in use for so very long. When the FFmpeg work is done, we can then clean up these files or access the new files we've generated, taking them out of the FFmpeg.FS environment and putting them to work in our web app.
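As a rough illustration of treating FFmpeg.FS like a small virtual disk, the sketch below lists the files in its root folder and removes those with a given suffix. It assumes the 0.11-style ffmpeg.FS(method, ...args) call used in this post, and that 'readdir' and 'unlink' are passed through to the underlying filesystem. The helper names listVirtualFiles and removeVirtualFiles are hypothetical.

```javascript
// List entries in the root of the virtual filesystem, skipping "." and "..".
// Assumes an already-loaded ffmpeg instance exposing FS(method, ...args).
function listVirtualFiles(ffmpeg) {
  return ffmpeg.FS('readdir', '/').filter((name) => name !== '.' && name !== '..');
}

// Remove every root-level file whose name ends with the given suffix.
// Returns the names that were removed.
function removeVirtualFiles(ffmpeg, suffix) {
  const removed = [];
  for (const name of listVirtualFiles(ffmpeg)) {
    if (name.endsWith(suffix)) {
      ffmpeg.FS('unlink', name);
      removed.push(name);
    }
  }
  return removed;
}

// Possible usage once the video has been read back out:
//   removeVirtualFiles(ffmpeg, '.png');
```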

Image To Video.

This looks a lot more complicated than it really is. When we click on the "Playback" button, the gist of what we're going to do is the following.

- Figure out the playback rate as an "fps" value - might be very different from the capture rate.

- Configure some buttons to show what we're doing.

- Initialize FFmpeg.wasm and its dependencies.

- Load our image array into FFmpeg.FS storage.

- Run the FFmpeg command to combine the images into one video file.

- Extract the resulting video from FFmpeg.FS storage.

- Clear out our images from FFmpeg.FS storage when we're done.

- Set up a video element on the page to play back the newly created video.

Here's the code. Let's have a look at how this works. First, if we were recording, we'll need to stop so that new images aren't generated after the fact. And then, if we've got data, we can figure out what framerate we're going to use and set our two buttons to show what we're up to.

procedure TForm1.btnPlaybackClick(Sender: TObject);
var
  FrameCount: Integer;
begin

  // If recording, stop
  if btnRecord.Tag = 1 then
  begin
    await(btnRecordClick(Sender));
  end;

  asm FrameCount = pas.Unit1.Form1.CaptureData.length; end;

  if FrameCount = 0 then
  begin
    btnPlayback.ElementClassName := StringReplace(btnPlayback.ElementClassName,'btn-light','btn-warning',[]);
    btnPlayback.ElementClassName := StringReplace(btnPlayback.ElementClassName,'btn-danger','btn-warning',[]);
    btnPlayback.ElementClassName := StringReplace(btnPlayback.ElementClassName,'btn-primary','btn-warning',[]);
    btnPlayback.Caption := '<div class="d-flex align-items-center justify-content-start"><i class="fa-solid fa-circle-play fa-fw fa-xl ps-1 me-2"></i>No Data Recorded</div>';
  end
  else
  begin
    btnPlayback.ElementClassName := StringReplace(btnPlayback.ElementClassName,'btn-light','btn-danger',[]);
    btnPlayback.ElementClassName := StringReplace(btnPlayback.ElementClassName,'btn-warning','btn-danger',[]);
    btnPlayback.ElementClassName := StringReplace(btnPlayback.ElementClassName,'btn-primary','btn-danger',[]);

    PlaybackRate := 1.0; //fps
    if pos('fps', editConfigPlaybackRate.Text) > 0
    then PlaybackRate := StrToFloat(Trim(Copy(editConfigPlaybackRate.Text,9,2)));
    if pos('fpm', editConfigPlaybackRate.Text) > 0
    then PlaybackRate := StrToFloat(Trim(Copy(editConfigPlaybackRate.Text,9,2))) / 60.0;

    if PlayBackRate >= 1.0
    then btnPlayback.Caption := '<div class="d-flex align-items-center justify-content-start"><i class="fa-solid fa-circle-play fa-fw fa-xl ps-1 me-2"></i>Play '+IntToStr(FrameCount)+'f @ '+FloatToStrF(PlaybackRate,ffNumber,5,0)+' fps<i id="ffprogress" class="fa-solid fa-xl" style="margin: 0px 8px 0px auto;"></i></div>'
    else btnPlayback.Caption := '<div class="d-flex align-items-center justify-content-start"><i class="fa-solid fa-circle-play fa-fw fa-xl ps-1 me-2"></i>Play '+IntToStr(FrameCount)+'f @ '+FloatToStrF(PlaybackRate,ffNumber,5,4)+' fps<i id="ffprogress" class="fa-solid fa-xl" style="margin: 0px 8px 0px auto;"></i></div>';

Next, we'll have to set up FFmpeg. This code was adapted from here. The main thing here is that the corePath is pointing at a location that allows FFmpeg to download the rest of its code - the actual WebAssembly binary application. The "log" option is used to add entries to console.log that indicate what it is doing, in some detail. Once the instance is defined, FFmpeg is then initialized.

    asm
      // Load and initialize ffmpeg
      const { createFFmpeg, fetchFile } = FFmpeg;
      const ffmpeg = createFFmpeg({
        log: true,
        mainName: 'main',
        corePath: 'https://cdn.jsdelivr.net/npm/@ffmpeg/core-st@0.11.1/dist/ffmpeg-core.js'
      });
      await ffmpeg.load();

Here, we're defining the function we're going to be using to convert our CaptureData images into the binary PNG files that FFmpeg works with (attribution). Also, we can output some progress information to the console. Unfortunately, we can't really do much else with this information as the version of FFmpeg we're using here is the single-threaded version, meaning any updates to the page are temporarily blocked. More on that in a moment.

      function dataURLtoFile(dataurl, filename) {
        var arr = dataurl.split(","),
            mime = arr[0].match(/:(.*?);/)[1],
            bstr = atob(arr[1]),
            n = bstr.length,
            u8arr = new Uint8Array(n);
        while (n--) {
          u8arr[n] = bstr.charCodeAt(n);
        }
        return new File([u8arr], filename, { type: mime });
      }

      ffmpeg.setProgress(async function({ ratio }) {
        console.log('FFmpeg Progress: '+ratio);
      });
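As an aside, the intermediate File object isn't strictly required to get at the raw PNG bytes. A minimal sketch of decoding a Base64 DataURI straight into a Uint8Array follows. The name dataUriToBytes is hypothetical, and passing the result directly to ffmpeg.FS('writeFile', ...) in place of fetchFile() is an assumption that hasn't been tried in this project.

```javascript
// Decode a Base64 DataURI into its raw bytes.
function dataUriToBytes(dataUri) {
  // Everything after the first comma is the Base64 payload
  const base64 = dataUri.slice(dataUri.indexOf(',') + 1);
  const binary = atob(base64);
  const bytes = new Uint8Array(binary.length);
  for (let i = 0; i < binary.length; i += 1) {
    bytes[i] = binary.charCodeAt(i);
  }
  return bytes;
}

// Every PNG starts with the same 8-byte signature, so the first bytes of a
// decoded screenshot should be 137, 80, 78, 71, 13, 10, 26, 10.
```

Checking for that signature is a quick way to confirm that a captured frame really is a PNG before handing it to FFmpeg.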

Here is the main JavaScript function that does the conversion. It is an async function, so our btnPlaybackClick() method needs to be marked as [async] as well. We start by adding each of our captured images to FFmpeg's filesystem and assigning a sequential filename. Then we'll change our icon to a spinning progress indicator which curiously does not stop spinning, even though other page updates are not processed while FFmpeg is running.

      const image2video = async () => {

        // This is the icon at the end of the Playback button
        ffprogress.classList.add('fa-file-circle-plus');

        // Add image files to ffmpeg internal filesystem
        FrameCount = pas.Unit1.Form1.CaptureData.length;
        for (let i = 0; i < FrameCount; i += 1) {
          const num = `000${i}`.slice(-4);
          ffmpeg.FS('writeFile', 'tmp.'+num+'.png', await fetchFile(dataURLtoFile(pas.Unit1.Form1.CaptureData[i],'frame-'+i+'.png')));
        }

        // Done with files, let's start with the notch
        ffprogress.classList.remove('fa-file-circle-plus');
        ffprogress.classList.add('fa-circle-notch', 'fa-spin');
        await sleep(50);

The core of what we're after is right here - the equivalent FFmpeg command that we'd normally run in a command-line environment. We're telling it what framerate we want to assign to the images we're adding. This is different than other FFmpeg commands where we can adjust the fps or other aspects of the resulting video. Then we tell it how to find the files we want to use, along with some information about the video format that we're after. It is entirely possible to pick a video codec that can't be played in the browser we're running in. When it is done, it will have produced a new video file in "out.mp4", which we'll want to get back out of that file system.

        // Perform the conversion
        await ffmpeg.run('-framerate', String(pas.Unit1.Form1.PlayBackRate), '-pattern_type', 'glob', '-i', '*.png','-c:v', 'libx264', '-pix_fmt', 'yuv420p', 'out.mp4');

        // Get the resulting video file
        const data = ffmpeg.FS('readFile', 'out.mp4');
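For comparison, the same conversion in a regular terminal would look something like ffmpeg -framerate 30 -pattern_type glob -i '*.png' -c:v libx264 -pix_fmt yuv420p out.mp4, with each space-separated token becoming one argument to ffmpeg.run(). Building that argument list in a small function makes it easier to inspect or tweak. This is only a sketch; buildImageToVideoArgs is a hypothetical helper and not part of the project.

```javascript
// Build the argument list for combining numbered images into one video.
// framerate:  playback rate in frames per second (must be a positive number)
// inputGlob:  wildcard that matches the image files, e.g. '*.png'
// outputName: name of the video file to produce, e.g. 'out.mp4'
function buildImageToVideoArgs(framerate, inputGlob, outputName) {
  if (!(framerate > 0)) {
    throw new RangeError('framerate must be a positive number');
  }
  return [
    '-framerate', String(framerate),  // rate assigned to the input images
    '-pattern_type', 'glob',          // treat the input name as a wildcard
    '-i', inputGlob,
    '-c:v', 'libx264',                // H.264 video codec
    '-pix_fmt', 'yuv420p',            // pixel format used in the post
    outputName,
  ];
}

// Possible usage:
//   await ffmpeg.run(...buildImageToVideoArgs(30, '*.png', 'out.mp4'));
```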

Here we're doing a little housekeeping, first by cleaning out all those files that we copied into the filesystem, and then by unloading FFmpeg entirely.

        // Done with the notch, back to the files
        ffprogress.classList.remove('fa-circle-notch','fa-spin');
        ffprogress.classList.add('fa-file-circle-minus');

        // Delete image files from ffmpeg internal filesystem
        for (let i = 0; i < FrameCount; i += 1) {
          const num = `000${i}`.slice(-4);
          ffmpeg.FS('unlink', 'tmp.'+num+'.png');
        }

        // Unload FFmpeg (exit is a function, so it has to be called)
        ffmpeg.exit();

Now we have our video in "data" and need to do something with it. We create a new <video> element in our page and position it to take up the entire page, overlaying the Catheedral app completely, including its buttons. We've also got a "double-click" event to close the video and delete it from the page when we're done with it. That's a bit tricky because browsers don't normally take kindly to this sort of thing, so we're careful to stop the video and unload it before removing it entirely. The video player is initially set to play and loop automatically. It has its own "download" button if we want to save the video for posterity - no need for us to do anything there.

// Create a place to show the video

const video = document.createElement('video');

// Load the video file into the page element

video.src = URL.createObjectURL(new Blob([data.buffer], { type: 'video/mp4' }));

// Set some attributes

video.id = "video";

video.controls = true;

video.loop = true;

// Set some styles

video.style.setProperty('position','fixed');

video.style.setProperty('left','0px');

video.style.setProperty('top','0px');

video.style.setProperty('width','1280px');

video.style.setProperty('height','400px');

video.style.setProperty('z-index','1000');

// Add to the page

document.body.appendChild(video);

// Load and start playing the video

video.load();

video.play();

// Wait for a double-click to unload the video

video.addEventListener('dblclick',function() {

video.pause();

video.removeAttribute('src');

video.load();

video.src = '';

video.srcObject = null;

video.remove()

});

// Set our buttons to indicate the final state

btnPlayback.classList.replace('btn-light','btn-primary');

btnPlayback.classList.replace('btn-warning','btn-primary');

btnPlayback.classList.replace('btn-danger','btn-primary');

ffprogress.classList.remove('fa-file-circle-minus');

}

image2video();

end;

end;

end;

There's no audio here, so there's not really an issue with the video playing automatically. Though it is kind of funny if it is set to record for a while and you forget, as the Catheedral interface is then shown in a looping video, indistinguishable from the normal Catheedral interface other than the fact that the clock is not showing the correct time or moving at the expected speed. Perhaps I'll change the main Configuration button to red or something to indicate that a recording is in progress, which will then also be red when played back.

Here's a one-minute video downloaded after recording for 30 minutes at 1 frame per second, set to play back at 30 frames per second. The resulting video file is 1.7 MB.

Catheedral Time-lapse.

This all works pretty well, and for short video recordings, the video generation step only takes a few seconds.

For the full 1,800 frames though, it does take a couple of minutes. And this is likely going to take a lot

longer running on a less capable system like a Raspberry Pi.
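The figures above follow from simple arithmetic: the frame count is the recording time multiplied by the capture rate, and the video length is the frame count divided by the playback rate. Here's a minimal sketch of that calculation. Note that `timelapseStats` is a hypothetical helper used only for illustration and is not part of the Catheedral project.

```javascript
// Hypothetical helper (not part of the Catheedral project): relates the
// recording time, capture rate, and playback rate to the number of frames
// captured and the length of the resulting video.
function timelapseStats(recordSeconds, captureFps, playbackFps) {
  const frames = Math.floor(recordSeconds * captureFps);
  const videoSeconds = frames / playbackFps;
  return { frames, videoSeconds };
}

// 30 minutes captured at 1 fps, played back at 30 fps
const stats = timelapseStats(30 * 60, 1, 30);
console.log(stats.frames);       // 1800
console.log(stats.videoSeconds); // 60
```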

A progress indicator of some kind would be helpful. What we've done in the above code is just add a Font Awesome spinner icon, and set it spinning while FFmpeg goes about its business. There's also a progress event included in the above, but all it is doing is logging the ratio of the current task to the console.

The version of FFmpeg we're using here is the single-threaded version. This means that even if we added more code in that event handler to update our page in some way, nothing is going to happen - the page (and JavaScript code accessing the page) is blocked while the long-running task is doing its thing. This is also evident in that the "system time" on the page stops updating. Fortunately, our Font Awesome icon keeps spinning, so there's that at least.

A possible way around this is to use the multi-threaded version of FFmpeg. There are quite a few more

requirements for that, however, and much of it has to do with CORS. In order for multi-threading to work, it needs access to a "SharedArrayBuffer". In the FFmpeg.wasm documentation, there's this

little bit of information.

SharedArrayBuffer is only available to pages that are cross-origin isolated. So you need to host your own server with Cross-Origin-Embedder-Policy: require-corp and Cross-Origin-Opener-Policy: same-origin headers to use ffmpeg.wasm.

And if you want to read more on what that is all about, they offer this link

to help explain why all this is necessary. Spoiler alert: Spectre. This is a bit more work than we'd like to

do at the moment, with little to no ROI on that particular task, but something to keep in mind if you're

building something more substantial, or if you plan on running larger FFmpeg tasks and want to take advantage of multithreading.
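Before attempting the multi-threaded version, it can help to check whether the page is actually cross-origin isolated. Browsers expose this through the global `crossOriginIsolated` property. The following is only a sketch: `canUseSharedMemory` and `pickCore` are hypothetical names, and the returned labels are placeholders rather than actual ffmpeg.wasm options.

```javascript
// Sketch: decide which kind of FFmpeg core to ask for, based on whether the
// page is cross-origin isolated. "scope" would normally be globalThis; it is
// a parameter here only so that the logic can be exercised outside a browser.
function canUseSharedMemory(scope) {
  return scope.crossOriginIsolated === true &&
         typeof scope.SharedArrayBuffer === 'function';
}

// Hypothetical helper: the labels returned are placeholders, not library options.
function pickCore(scope) {
  return canUseSharedMemory(scope) ? 'multi-threaded' : 'single-threaded';
}

console.log(pickCore(globalThis));
```

If this reports "single-threaded" on a page where multi-threading was expected, the two headers quoted above are the first thing to check.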

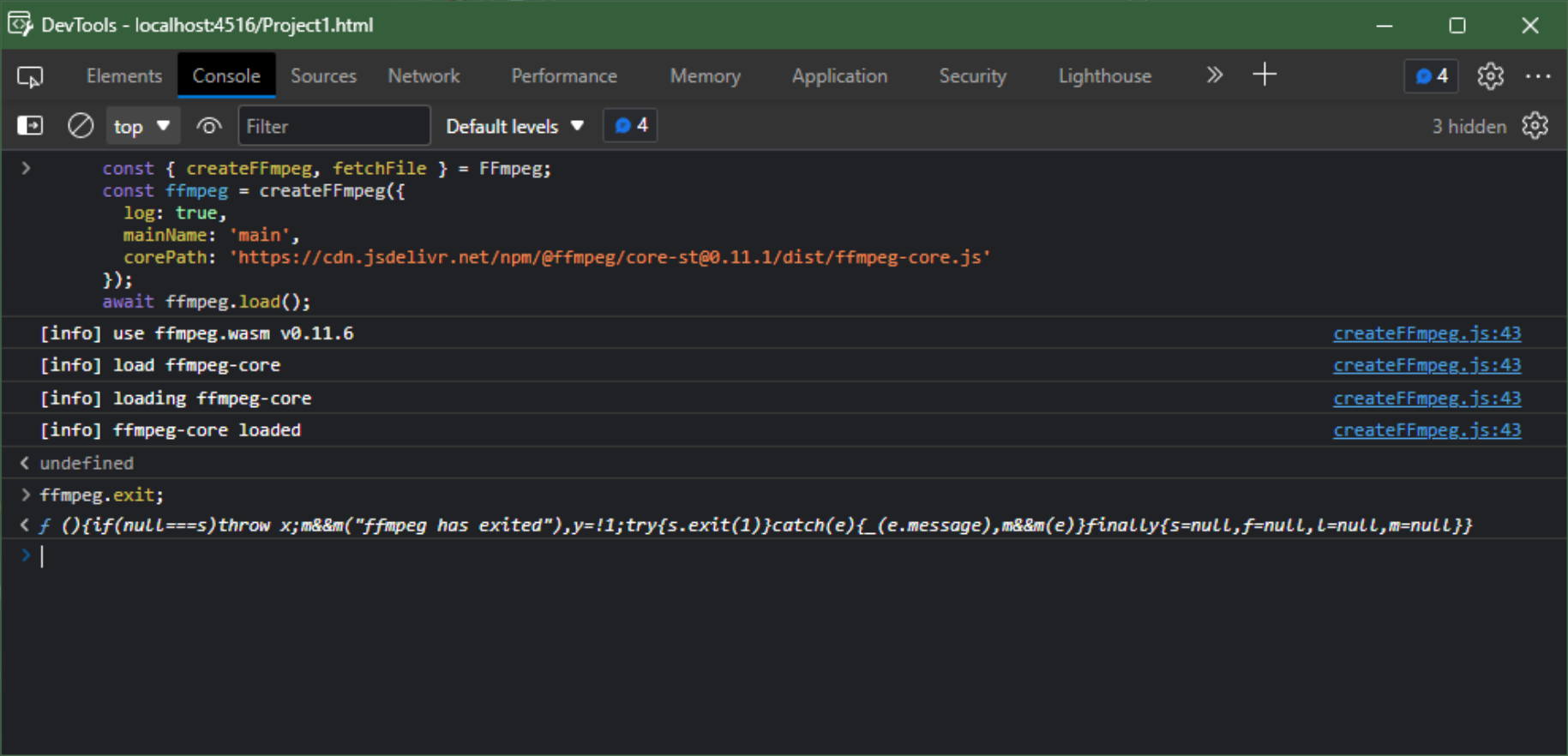

If you're going to test those versions, it might also help to see what is going on in more detail. With

everything loaded up in Project.html, you can test whether FFmpeg.wasm is ready to go by running the same

initialization code in the console. For example, we can just copy and paste the initialization code above into

the browser console like this.

FFmpeg.wasm Initialization.

If anything is amiss, this should indicate where the problem is. For example, if there's a typo in "corePath"

or something like that, it won't be able to load ffmpeg-core and you'll get an error about that with some

details. Note that the files that are being loaded here are a little larger than the JavaScript libraries we

normally work with - the main ffmpeg.wasm file is around 8.5 MB for example - huge by normal JavaScript

standards. If you try to use the multi-threaded version of FFmpeg.wasm without meeting all the criteria, you'll

likely run into a SharedArrayBuffer error of some kind or other. And also be mindful of the revisions,

particularly if you use different CDNs.

Another Example.

If we get rid of all the UI elements and various options, the whole process is actually not all that

complicated. So let's have a quick look at another example. The Template

project has been coming along nicely. Let's add the ability to record a session to that project. We'll

start by adding a pair of buttons to the User Menu, allowing us to start/stop recording and then play back the

results.

Buttons for Recording Sessions.

As we had previously, we'll need a timer event. In this case, we'll want to show only the part of the page that

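is visible, so we'll limit the size. Also, FFmpeg requires the dimensions to be even-numbered (at least for the libx264 and yuv420p combination used here), so the width and height are rounded up to the nearest even value. Some fancy JavaScript code is used to convert the frame count into an HH:nn:ss display at the top of the page with the same icon as the Record button.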
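The two calculations just mentioned can be tried on their own in the browser console. This is a minimal sketch: `toEven` and `formatElapsed` are hypothetical helper names, not functions from the Template project, though they use the same expressions as the timer code.

```javascript
// Round a pixel dimension up to the nearest even number.
function toEven(n) {
  return Math.ceil(n / 2) * 2;
}

// Format a number of elapsed seconds as HH:nn:ss. This only works for values
// under 24 hours, because it reads the time-of-day part of an ISO timestamp.
function formatElapsed(seconds) {
  return new Date(1000 * seconds).toISOString().slice(11, 19);
}

console.log(toEven(1279));       // 1280
console.log(toEven(400));        // 400
console.log(formatElapsed(75));  // 00:01:15
console.log(formatElapsed(600)); // 00:10:00
```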

procedure TMainForm.tmrCaptureTimer(Sender: TObject);

begin

asm

// Maximum capture - 600 frames (10m @ 1 fps)

if (pas.UnitMain.MainForm.CaptureData.length < 600) {

modernScreenshot.domToPng(document.querySelector('#divHost'), {scale:1.0, width: Math.ceil(window.innerWidth / 2) * 2, height: Math.ceil(window.innerHeight / 2) * 2 }).then(dataURI => {

console.image(dataURI,0.5);

pas.UnitMain.MainForm.CaptureData.push(dataURI);

var icon = pas.UnitIcons.DMIcons.Lookup;

var rectime = new Date(1000 * pas.UnitMain.MainForm.CaptureData.length).toISOString().slice(11, 19);

btnRecording.innerHTML = icon['Record']+rectime;

});

}

end;

end;

For the Record button, we're doing the same sort of thing we did earlier - starting a timer. But in this case, we're just using a 1 fps value - the timer interval is set to 1000 without any option to change it, as it isn't really needed here.

procedure TMainForm.RecordSession;

begin

if RecordingSession = False then

begin

RecordingSession := True;

asm

var Icon = pas.UnitIcons.DMIcons.Lookup;

btnRecording.innerHTML = Icon['Record']+'00:00:00';

btnRecord.innerHTML = Icon['Record']+'Stop Recording';

btnRecording.classList.remove('d-none');

pas.UnitMain.MainForm.CaptureData = [];

end;

MainForm.tmrCapture.Enabled := True;

end

else

begin

RecordingSession := False;

asm

var Icon = pas.UnitIcons.DMIcons.Lookup;

btnRecording.classList.add('d-none');

btnRecord.innerHTML = Icon['Record']+'Start Recording';

end;

MainForm.tmrCapture.Enabled := False;

end;

end;

For Playback, we're also doing the same thing, but without all the extra stuff. And playback is also 1 fps. Nice

and simple.

procedure TMainForm.PlaybackSession;

var

FrameCount: Integer;

PlaybackRate: Double;

begin

asm FrameCount = pas.UnitMain.MainForm.CaptureData.length; end;

if FrameCount = 0 then

begin

// Nothing to do

end

else

begin

PlaybackRate := 1;

asm

// Load and initialize ffmpeg

const { createFFmpeg, fetchFile } = FFmpeg;

const ffmpeg = createFFmpeg({

log: true,

mainName: 'main',

corePath: 'https://cdn.jsdelivr.net/npm/@ffmpeg/core-st@0.11.1/dist/ffmpeg-core.js'

});

await ffmpeg.load();

function dataURLtoFile(dataurl, filename) {

var arr = dataurl.split(","),

mime = arr[0].match(/:(.*?);/)[1],

bstr = atob(arr[1]),

n = bstr.length,

u8arr = new Uint8Array(n);

while (n--) {

u8arr[n] = bstr.charCodeAt(n);

}

return new File([u8arr], filename, { type: mime });

}

ffmpeg.setProgress(async function({ ratio }) {

console.log('FFmpeg Progress: '+ratio);

});

const image2video = async () => {

// Add image files to ffmpeg internal filesystem

FrameCount = pas.UnitMain.MainForm.CaptureData.length;

for (let i = 0; i < FrameCount; i += 1) {

const num = `000${i}`.slice(-4);

ffmpeg.FS('writeFile', 'tmp.'+num+'.png', await fetchFile(dataURLtoFile(pas.UnitMain.MainForm.CaptureData[i],'frame-'+i+'.png')));

}

// Perform the conversion

await ffmpeg.run('-framerate', String(PlaybackRate), '-pattern_type', 'glob', '-i', '*.png','-c:v', 'libx264', '-pix_fmt', 'yuv420p', 'out.mp4');

// Get the resulting video file

const data = ffmpeg.FS('readFile', 'out.mp4');

// Delete image files from ffmpeg internal filesystem

for (let i = 0; i < FrameCount; i += 1) {

const num = `000${i}`.slice(-4);

ffmpeg.FS('unlink', 'tmp.'+num+'.png');

}

ffmpeg.exit();

// Create a place to show the video

const video = document.createElement('video');

// Load the video file into the page element

video.src = URL.createObjectURL(new Blob([data.buffer], { type: 'video/mp4' }));

// Set some attributes

video.id = "video";

video.controls = true;

video.loop = true;

// Set some styles

video.style.setProperty('position','fixed');

video.style.setProperty('left','0px');

video.style.setProperty('top','0px');

video.style.setProperty('width','100%');

video.style.setProperty('height','100%');

video.style.setProperty('z-index','1000000');

// Add to the page

document.body.appendChild(video);

// Load and start playing the video

video.load();

video.play();

// Wait for a double-click to unload the video

video.addEventListener('dblclick',function() {

video.pause();

video.removeAttribute('src');

video.load();

video.src = '';

video.srcObject = null;

video.remove()

});

}

image2video();

end;

end;

end;

And that's about it. We can give the user the ability to record sessions in case they want to document

something or report an issue of some kind. A future update will provide an option to send the resulting video

as part of a trouble ticket in case there's an issue that needs some kind of follow-up. Here's an example of

what the video looks like.

Template Time-Lapse.

This went a little more smoothly than the Catheedral implementation, but there are still a few little things

that will need more attention.

- While the video above looks ok, this was taken using Microsoft Edge. When using Google Chrome, the text of the light-colored buttons doesn't change to black for some reason, making them very difficult to read.

- As we're just showing the part of the page that is visible (the top part), it doesn't factor in scrolling. There are options for this that I've not yet tested - to scroll the virtual page being captured to be in the same place as the current page scroll value. There is also the option to capture the entire page, but this doesn't work so well when pages are different sizes.

- Scrollbars in general don't match the styling of what is on the page, but this is currently a limitation of this JavaScript library - not much we can do about it at the moment.

Overall though, this works pretty well and could do a reasonable job of helping report problems or documenting

how to perform a certain function, which is all we're after in this case.

Other Ideas.

While this is workable for both examples, there are many ways this could be expanded or improved upon. Here are some ideas.

- We could capture the mouse pointer location (potentially at a much higher frequency than the image capture) and then overlay a mouse pointer image on each screenshot image (duplicating them if we need a higher framerate to show the mouse movement) as we build the video. This is easily within FFmpeg's capabilities.

- Likewise, we could add a watermark or other information, like a timecode, to the video, or process it in any

number of ways in terms of compression or streaming optimizations.

- FFmpeg has many features that can be used to shorten video in various ways, including removing duplicate frames, black or white frames, or frames with very little content.

- WebWorkers could be used to help improve the overall performance of the capturing process. We could also filter out elements we don't need, like perhaps common headers or footers.

- If your project is a kiosk app, this mechanism could be used to capture screenshots or video periodically for monitoring or auditing purposes, without user intervention of any kind.
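As a rough illustration of the duplicate-frame idea, the argument list passed to FFmpeg could be built by a small function that optionally adds a filter. This is a sketch only: `buildImage2VideoArgs` is a hypothetical helper, and it assumes that the `mpdecimate` and `setpts` filters are available in the FFmpeg.wasm core being used, which hasn't been verified here.

```javascript
// Sketch: build the argument list for ffmpeg.run(), optionally dropping
// near-duplicate frames. Assumes (unverified) that the mpdecimate and setpts
// filters are compiled into the FFmpeg.wasm core in use.
function buildImage2VideoArgs(playbackRate, dropDuplicates) {
  const args = ['-framerate', String(playbackRate),
                '-pattern_type', 'glob', '-i', '*.png'];
  if (dropDuplicates) {
    // mpdecimate drops frames that differ very little from the previous one;
    // setpts then regenerates timestamps so the remaining frames are evenly spaced.
    args.push('-vf', 'mpdecimate,setpts=N/FRAME_RATE/TB');
  }
  args.push('-c:v', 'libx264', '-pix_fmt', 'yuv420p', 'out.mp4');
  return args;
}

console.log(buildImage2VideoArgs(30, true).join(' '));
```

With the second parameter set to false, this produces the same arguments used in the examples above, and the result could be passed along as `await ffmpeg.run(...buildImage2VideoArgs(PlaybackRate, true))`.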

But perhaps most interesting of all, the fact that we now have easy access to everything that FFmpeg offers

means that we can create all kinds of apps that might not be so easy without FFmpeg. While this is nowhere near

what we would need for a full-blown video editing (aka non-linear editing, or NLE) app, we can still create a lot of useful functionality.

- Create web apps for transcoding videos from one format to another.

- Combine audio and video streams in different ways, perhaps changing the speed of one to match the other, for

example.

- Extract audio or images from existing videos. FFmpeg is already pretty good at extracting thumbnails using many complex criteria.

- Create an app for trimming videos or splicing together different videos, maybe even from different sources or with different formats.

- Alter the dimensions of a video or make various other changes, like watermarking overlays, or other frame-level processing tasks.
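To give a sense of how little code some of these might need, here's a sketch of the arguments for trimming a clip without re-encoding it. `buildTrimArgs` is a hypothetical helper and the file names are placeholders. The input file would first need to be written into FFmpeg's internal filesystem, as was done with the PNG frames earlier.

```javascript
// Sketch: argument list for trimming a clip using stream copy (no re-encoding).
// With stream copy, cut points generally land on keyframes, so the result may
// not start exactly at the requested time.
function buildTrimArgs(input, startSeconds, durationSeconds, output) {
  return ['-ss', String(startSeconds), '-i', input,
          '-t', String(durationSeconds), '-c', 'copy', output];
}

console.log(buildTrimArgs('in.mp4', 10, 5, 'clip.mp4').join(' '));
// -ss 10 -i in.mp4 -t 5 -c copy clip.mp4
```

The resulting array could then be passed to `ffmpeg.run()` in the same way as the image-to-video command.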

But I think that about covers it for now. What do you think? Is this something you can find a use for in your

projects? What other kinds of apps come to mind?

Link to GitHub repository for Catheedral

Link to GitHub repository for Template

Follow Andrew on 𝕏 at @WebCoreAndMore or join our 𝕏 Web Core and More Community.

Andrew Simard
